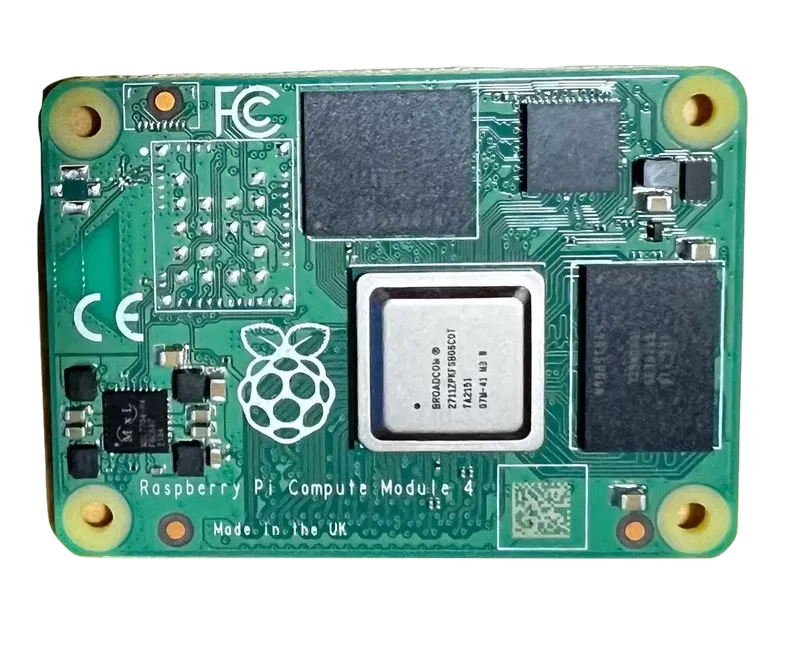

Hardware, in our modern era, does not exist in a vacuum; it requires software to function and be useful. One of the main benefits of living within the Raspberry Pi ecosystem is that you get up-to-date software maintained by a large network of source code contributors. For the Linux kernel used in Raspberry Pi OS alone, over 5,000 people have contributed to the project, adding, removing and modifying hundreds of thousands of lines of code. Raspberry Pi is successful because of its ecosystem, which is so large that it is self-sustaining.

The Raspberry Pi Compute Module 4 (the "CM4") was introduced about two years ago; see the official Raspberry Pi CM4 datasheet for details. It is a follow-up to the wildly successful Raspberry Pi 4b, but in a different form factor: unlike the 4b, it requires a carrier or IO board to be useful. The good news is that it is compatible with a bewildering array of carrier and IO boards.

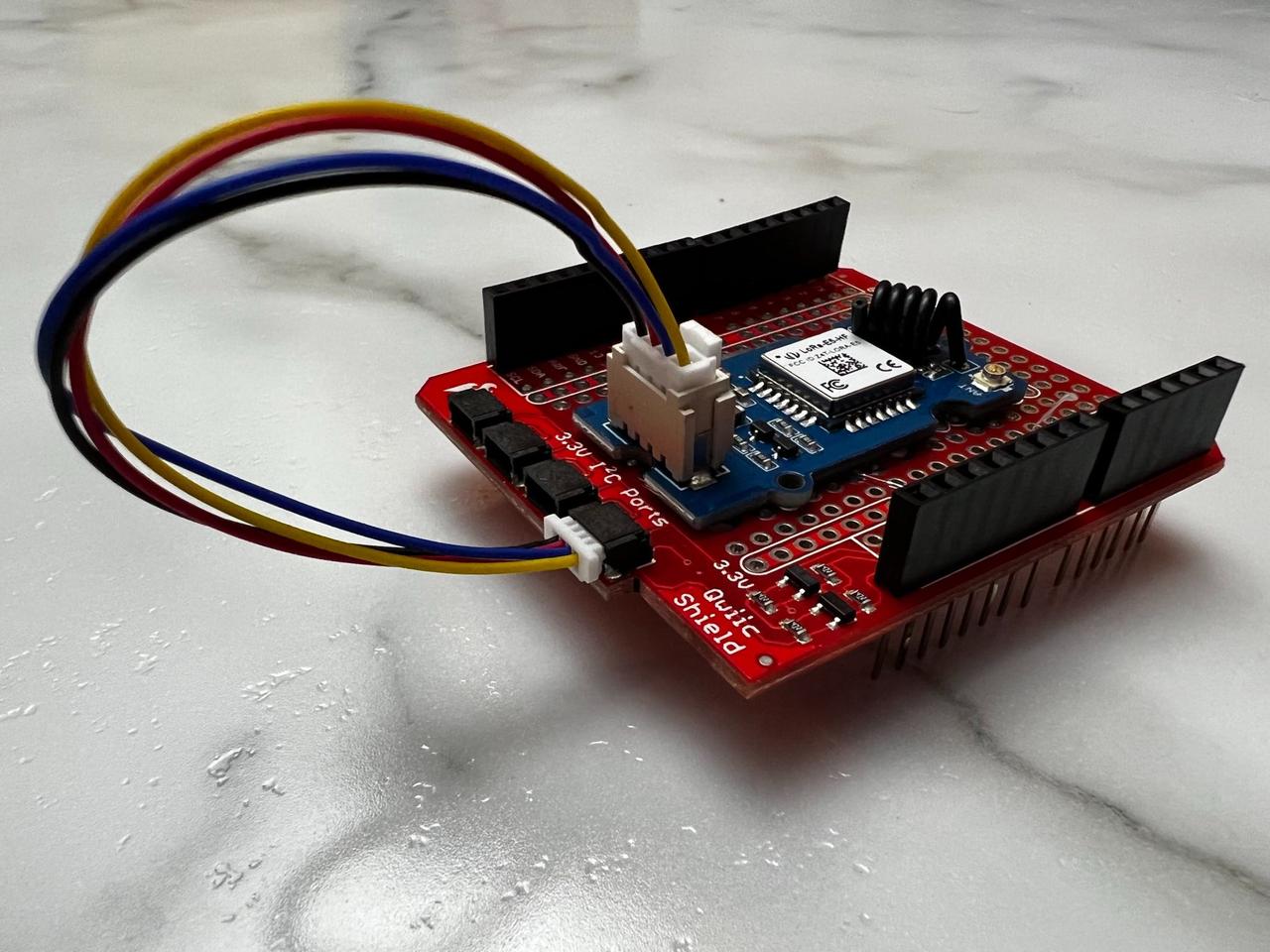

Raspberry Pi CM4

Raspberry Pi Compute Module 4, 1GB memory

There are IO boards that give you the same form factor as the RPi 4b, IO boards that turn your CM4 into a KVM for server management, and boards with two Ethernet ports, allowing for the creation of a simple router. There are also boards that expose the CM4's PCIe bus, which opens up the possibility of using peripherals like additional network adapters or SATA controllers. More on that later.

Since the CM4's release, a few pin-compatible modules have been developed by other firms. By pin-compatible, I mean that these modules can be correctly attached to the same IO boards via the Hirose mating connectors.

One of the primary benefits of using a genuine Raspberry Pi CM4, as I mentioned in the first paragraph, is the ecosystem. The CM4 uses the same operating system as the 4b, which allows nearly all the same software to be used across the RPi family of single board computers. This highlights one of the most commonly raised issues with non-Raspberry Pi single board computers: their software ecosystems are just not as robust as Raspberry Pi's. This is not limited to the alternatives to the CM4. There is an array of alternatives to the RPi family, like the boards made by Pine64, Libre, or Hardkernel (the Odroid series), and none of them can run the official Raspberry Pi OS.

Jeff Geerling does a fantastic job of reviewing the RPi CM4, so I am not going to give a complete, in-depth review here; Jeff has already done that.

Core Features:

- Optional eMMC, zero to 32GB

- Optional Wireless (WiFi and Bluetooth)

- Variety of memory sizes, 1GB to 8GB

If by some chance you stumbled onto this post and need help getting Raspberry Pi OS running on a CM4, check out this guide. I'm not going to go into details here; it is a solved problem.

Many of the alternatives to Raspberry Pi OS have a very similar feel and a shallow learning curve for setup, but they are not 100% the same. Take, for example, the multi-board Linux distribution Armbian, which supports over 160 different single board computers. If you have a well-established board, there is a good chance there's an Armbian build for it. Armbian is very similar to RPi OS: both are derivatives of Debian, both can use standard Ubuntu and Debian packages, and both are installed the same way, by writing a disk image to an SD card and booting from it. There is no guarantee, however, that all software designed for Raspberry Pi OS will run under Armbian, particularly when dealing with third-party shields and GPIO boards, as well as things I tend to ignore, like video encoding/decoding and sound.

The common quip lately goes something like this: because of the shortage of Raspberry Pi computers, some people have turned to alternatives. This might be true for some, but it is not my justification for using or testing the three alternatives featured throughout the rest of this article.

All Raspberry Pi single board computers and modules are in tight supply for the retail and hobbyist markets. Check out Raspberry Pi Locator for places that might have supply. If you are willing to pay a significant premium, eBay has quite a few available.

Because the RPi CM4 has already been covered extensively (for example, in Jeff Geerling's review), I'll be looking at the remaining three modules instead.

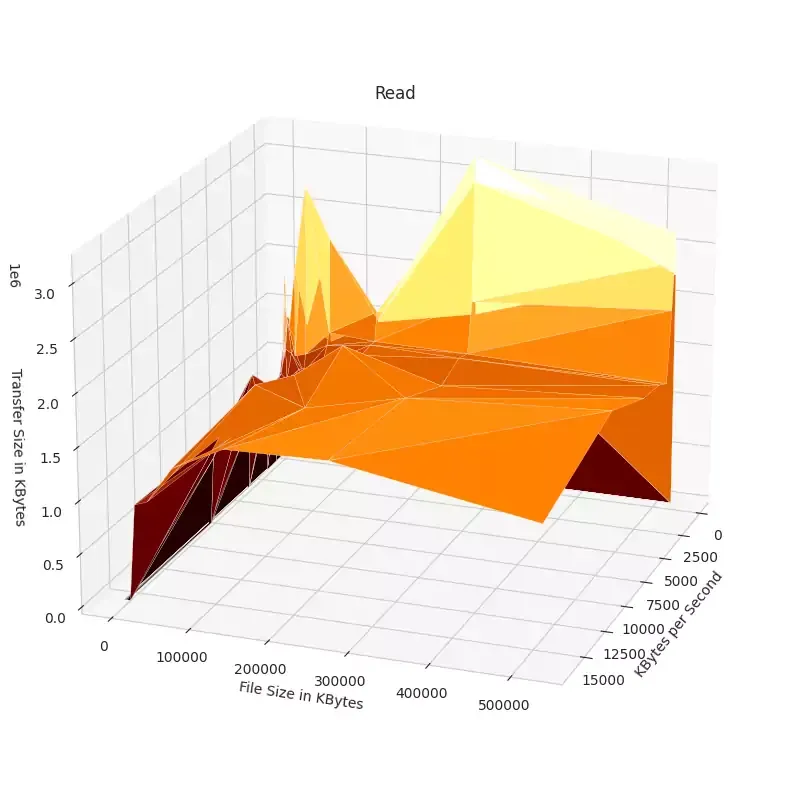

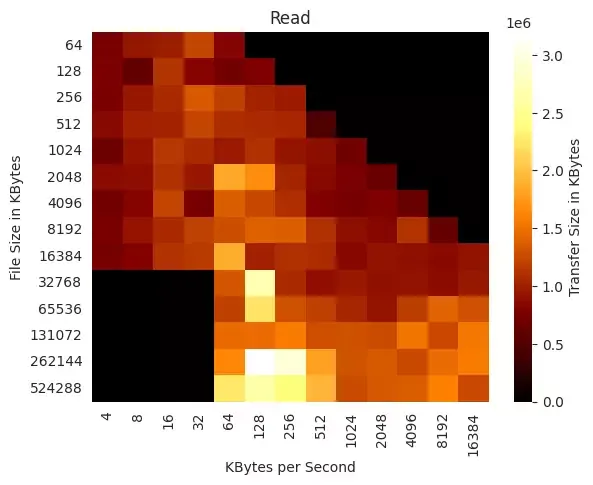

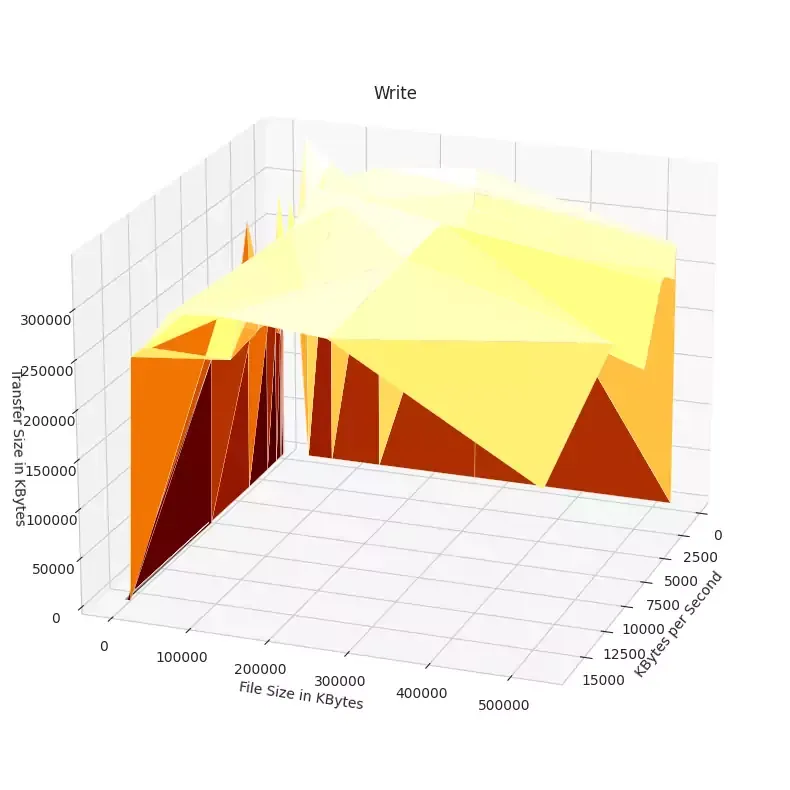

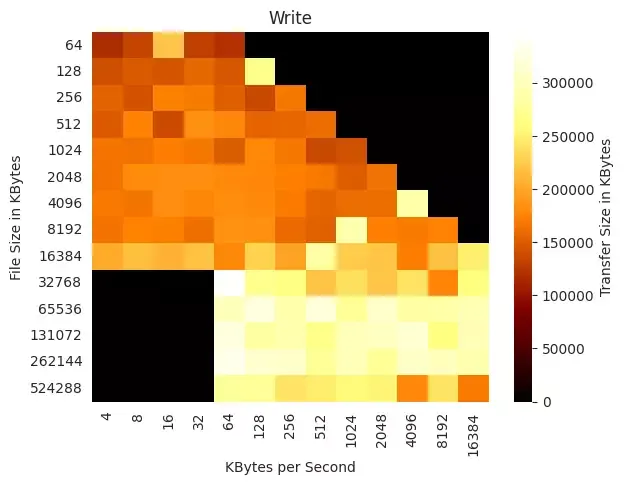

Performance Metrics

|

Features Comparison

|

|

Raspberry Pi CM4 |

Radxa CM3 |

Pine64 SOQuartz |

Banana Pi CM |

|

Specifications |

Specifications |

Specifications |

Specifications |

| Core |

Broadcom BCM2711, Quad core Cortex-A72 (ARM v8) 64-bit SoC @ 1.5GHz |

Rockchip RK3566, Quad core Cortex-A55 (ARM v8) 64-bit SoC @ 2.0GHz |

Rockchip RK3566, Quad core Cortex-A55 (ARM v8) 64-bit SoC @ 1.8GHz and Embedded 32-bit RISC-V CPU |

Amlogic A311D Quad core ARM Cortex-A73 and dual core ARM Cortex-A53 CPU |

| NPU |

- |

0.8T NPU |

0.8 TOPS Neural Network Acceleration Engine |

5.0 TOPS |

| GPU |

- |

Mali G52 GPU |

Mali-G52 2EE Bifrost GPU |

Arm Mali-G52 MP4 (6EE) GPU |

| Memory |

1GB, 2GB, 4GB or 8GB LPDDR4 |

1GB, 2GB, 4GB or 8GB LPDDR4 |

2GB, 4GB, 8GB LPDDR4 |

4GB LPDDR4 |

| eMMC |

On module - 0GB to 32GB |

On module - 0GB to 128GB |

External - 16GB to 128GB |

On module - 16GB to 128G) |

| Network |

1Gbit Ethernet - Option for WiFi5 with Bluetooth 5.0 |

1Gbit Ethernet - Option for WiFi5 with Bluetooth 5.0 |

1Gbit Ethernet - WiFi 802.11 b/g/n/ac with Bluetooth 5.0 |

1Gbit Ethernet |

| PCIe |

1-lane |

1-lane |

1-lane |

1-lane |

| HDMI |

2x HDMI |

1x HDMI |

1x HDMI |

1x HDMI |

| GPIO |

28 pin |

40 pin |

28 pin |

26 pin |

| Extras |

- |

- |

- |

SATA ports, one shared with USB 3, one shared with PCIe; Audio Codec |

| Geekbench Score - Single CPU |

228 |

163 |

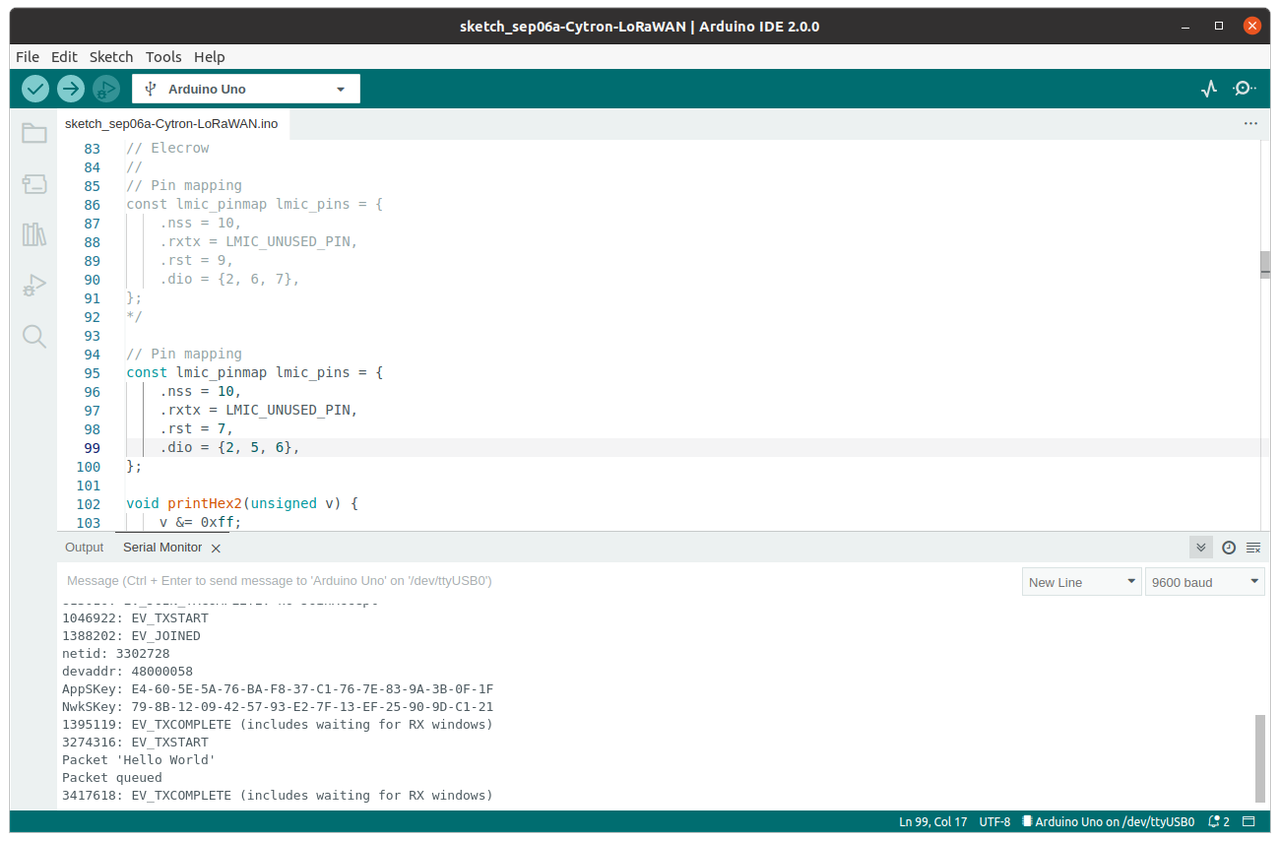

156 |

295 |

| Geekbench Score - Multi CPU |

644 |

508 |

491 |

1087 |

| Price of Tested* |

$65 |

$69 |

$49 |

$105 |

| Power Consumption |

7 watts |

N/A |

2 watts |

N/A |

* Prices exclude shipping

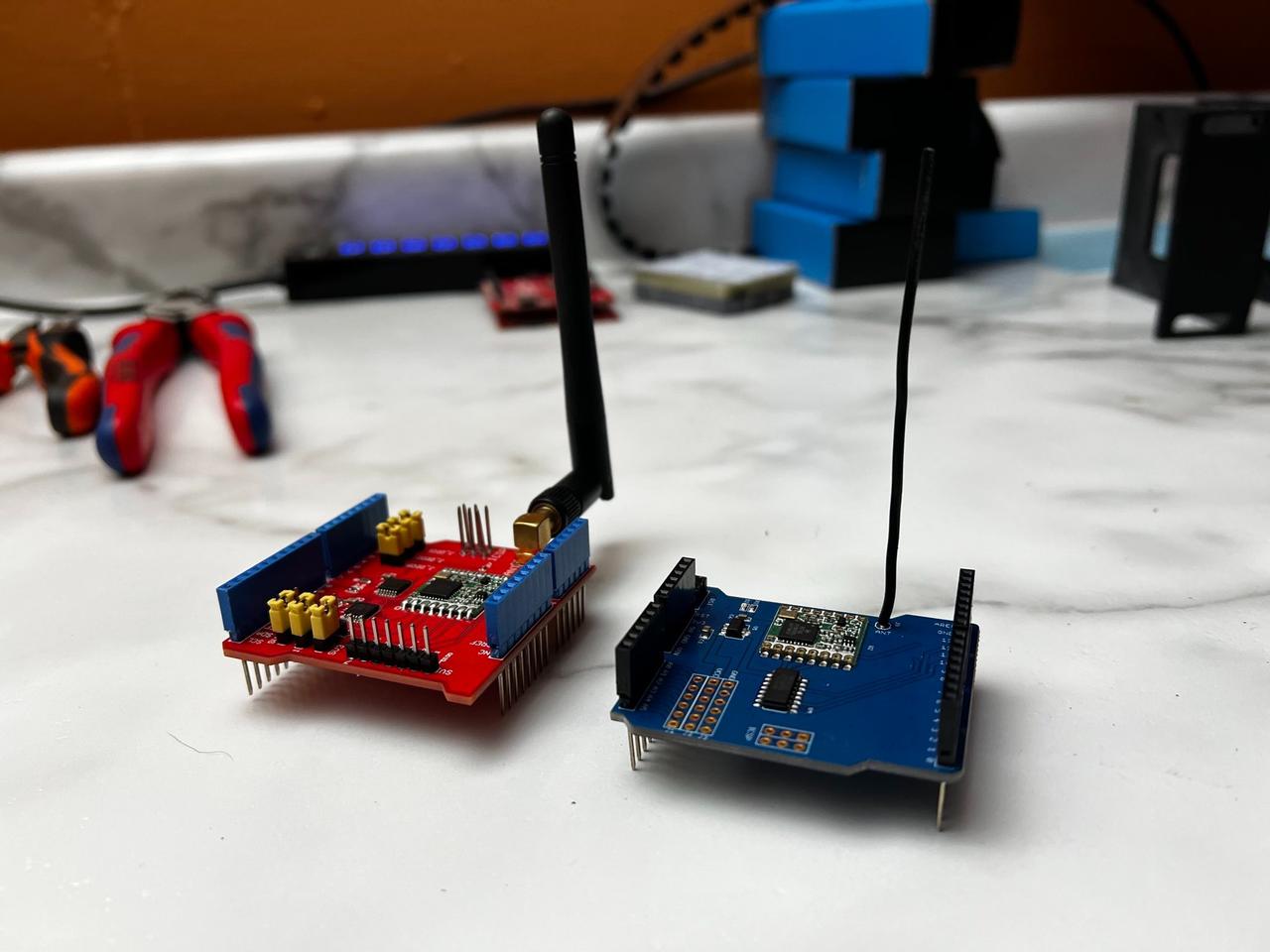

Pine64 SOQuartz

Pine64 SOQuartz Module, 4GB memory

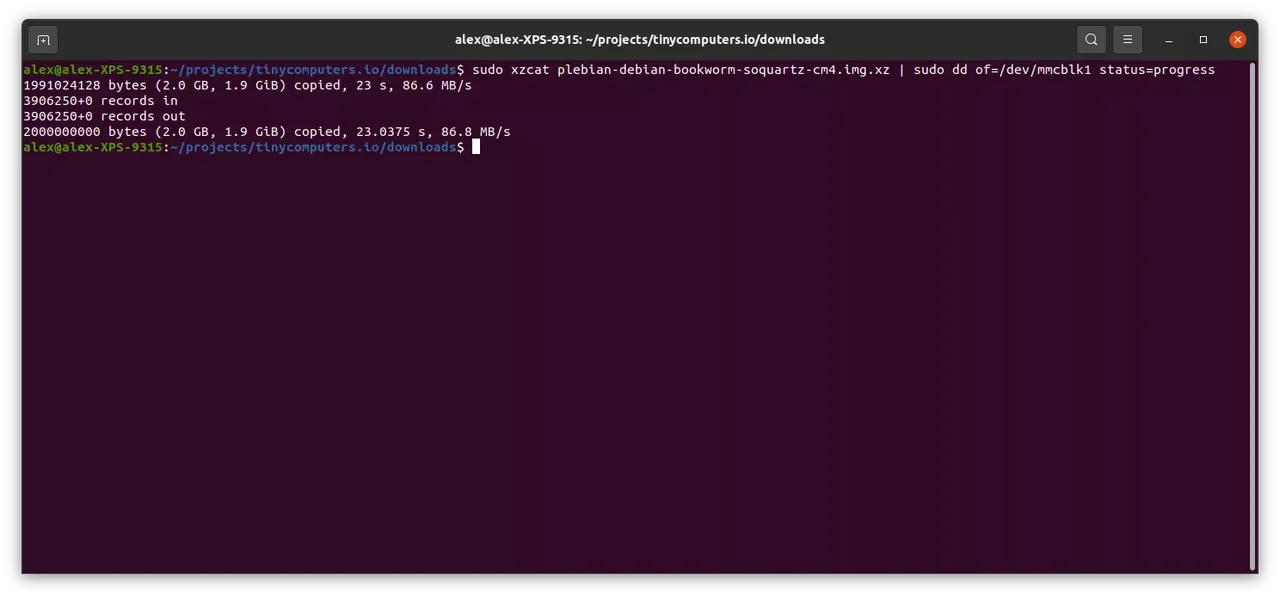

For whatever reason, I really like Pine64's SOQuartz module. It is by far the least performant of the four compute modules I have tried. It has a wonky antenna and needs a far-from-mainstream variety of Linux to be useful. There are two Linux distributions available: DietPi and Plebian Linux. I settled on Plebian. I would have gone with DietPi, but my initial use case was a two-Ethernet router built on a Waveshare Dual Gigabit Ethernet Base Board Designed for Raspberry Pi Compute Module 4, and I was unable to get both Ethernet ports working. Plebian was simpler.

For those interested in trying Plebian, you can download recent disk images from Plebian Linux's GitHub Actions: select one of the recent "Build Quartz64 Images" runs, and at the bottom you will find zipped disk image artifacts for the various flavors of Quartz64.

Plebian is a bit rough around the edges. It is derived from Debian Testing (currently codenamed bookworm) and runs a release candidate Linux kernel. Its developer, CounterPillow, also states that "This is a work-in-progress project. Things don't work yet. Please do not flash these images yet unless you are trying to help develop this pipeline." Interacting with the system feels similar to NetBSD in the early 2010s. That is not to say it is not a modern flavor of Linux; it simply lacks some of the usual conveniences. You want your network interfaces to be named eth0? How about no. Interfaces are not aliased: if you can get the WiFi drivers working, you will end up with a device named something like wlxe84e069541e6 instead of wlan0. Given that it runs a testing branch of Debian, tools like Docker will likely not work without some significant wrangling.
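If the wlx-style name gets in the way, one common workaround (not something I describe using here) is a systemd .link file that matches the adapter by MAC address and assigns a friendlier name. MAC-based names are "wlx" followed by the adapter's 12 hex digits, so the MAC can be recovered from the name itself. This is a minimal sketch, assuming Plebian uses systemd-udevd the way Debian does and that the interface name really is MAC-based; mac_from_wlx_name and link_file_for are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: turn a MAC-based interface name such as
# wlxe84e069541e6 into a systemd .link file that renames the interface.

# Print the colon-separated MAC address encoded in a wlx<12 hex digits> name.
mac_from_wlx_name() {
  local name="$1"
  local hex="${name#wlx}"
  if [[ "$name" != wlx* || ! "$hex" =~ ^[0-9a-f]{12}$ ]]; then
    echo "not a MAC-based wlx name: $name" >&2
    return 1
  fi
  # Put a colon after every pair of hex digits, then drop the trailing colon.
  sed -E 's/(..)/\1:/g; s/:$//' <<< "$hex"
}

# Print a .link file that matches the adapter by MAC and assigns NEW_NAME.
link_file_for() {
  local current="$1" new_name="$2" mac
  mac=$(mac_from_wlx_name "$current") || return 1
  cat <<EOF
[Match]
MACAddress=$mac

[Link]
Name=$new_name
EOF
}
```

For example, link_file_for wlxe84e069541e6 wlan0 | sudo tee /etc/systemd/network/10-wlan0.link, followed by a reboot. The systemd.link documentation warns that kernel-style names such as eth0 or wlan0 can race with names the kernel assigns, so a name like wifi0 may be the safer choice.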

Why do I like this compute module? I like Pine64's products. I like the community that has grown up around the products. In the course of trying to get an operating system up and running, I had numerous questions that I asked on Pine64's Discord Server. Everyone was extremely helpful and despite my own feelings that some of my questions were simplistic, no one expressed that sentiment. There were no massive egos to speak of.

Core Features:

- Variety of memory options: 2GB to 8GB
- External eMMC module support: 8GB to 128GB
- WiFi

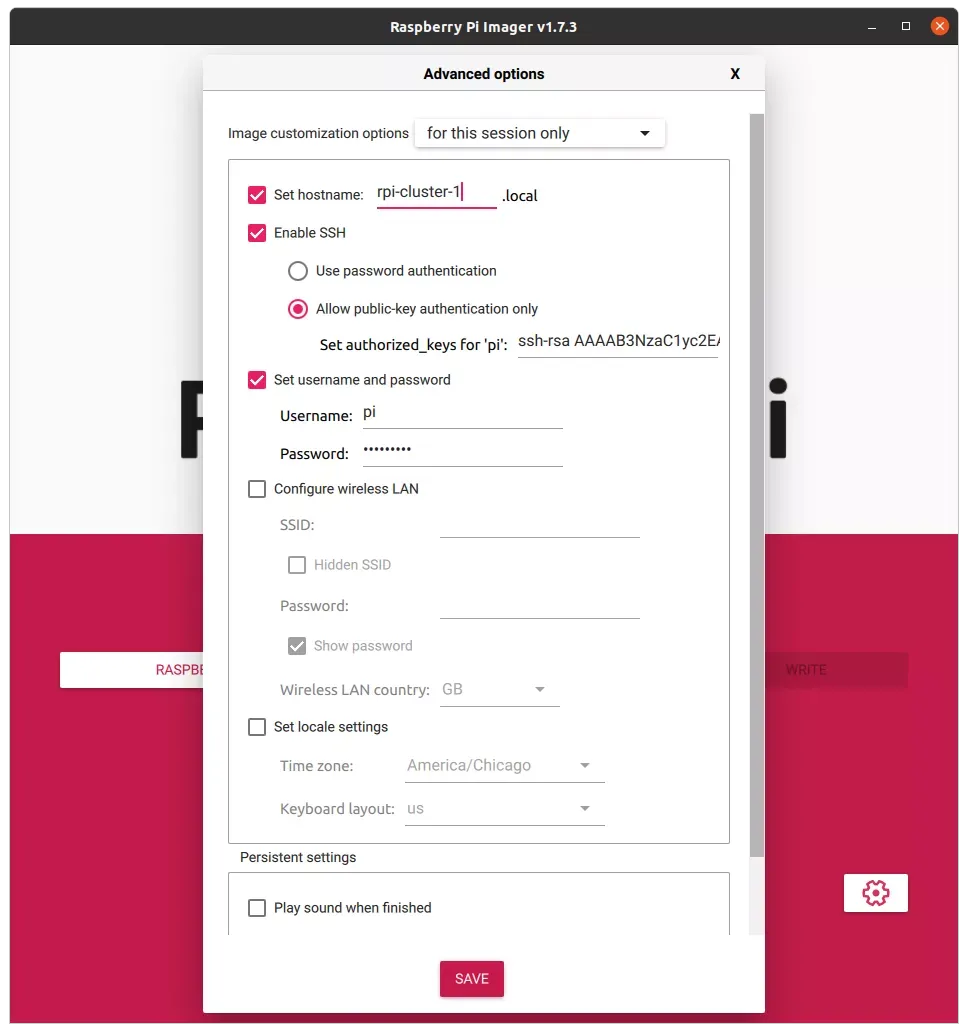

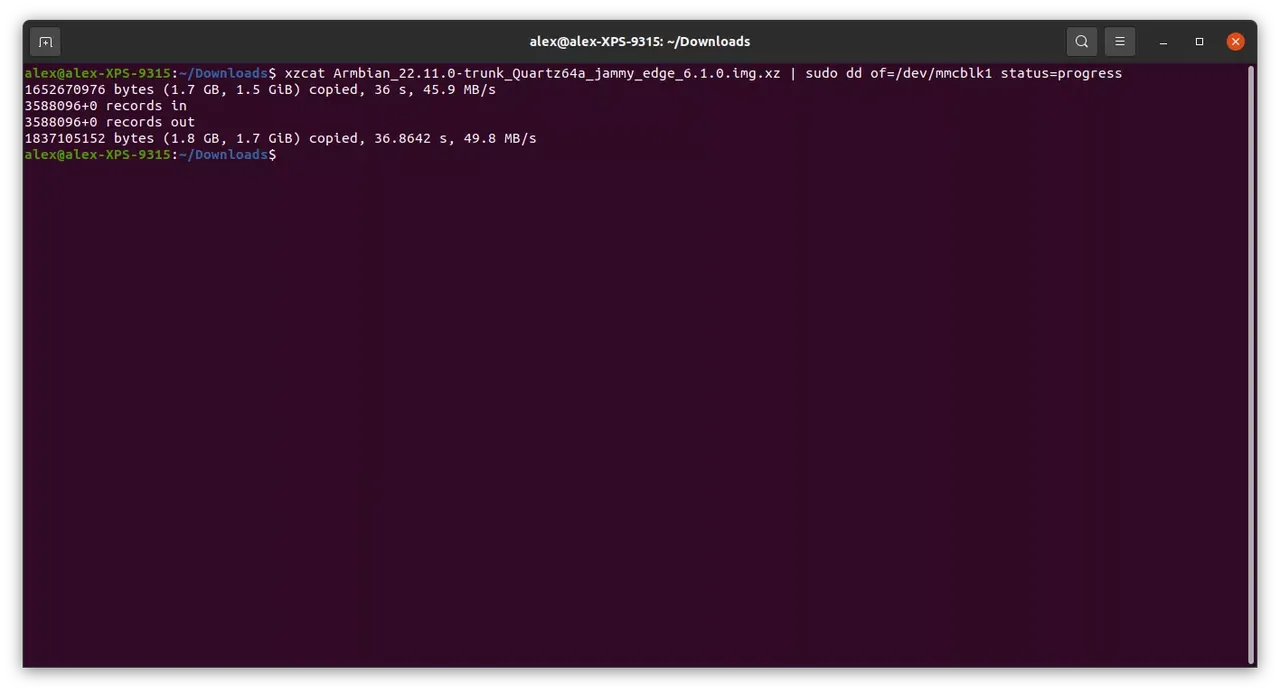

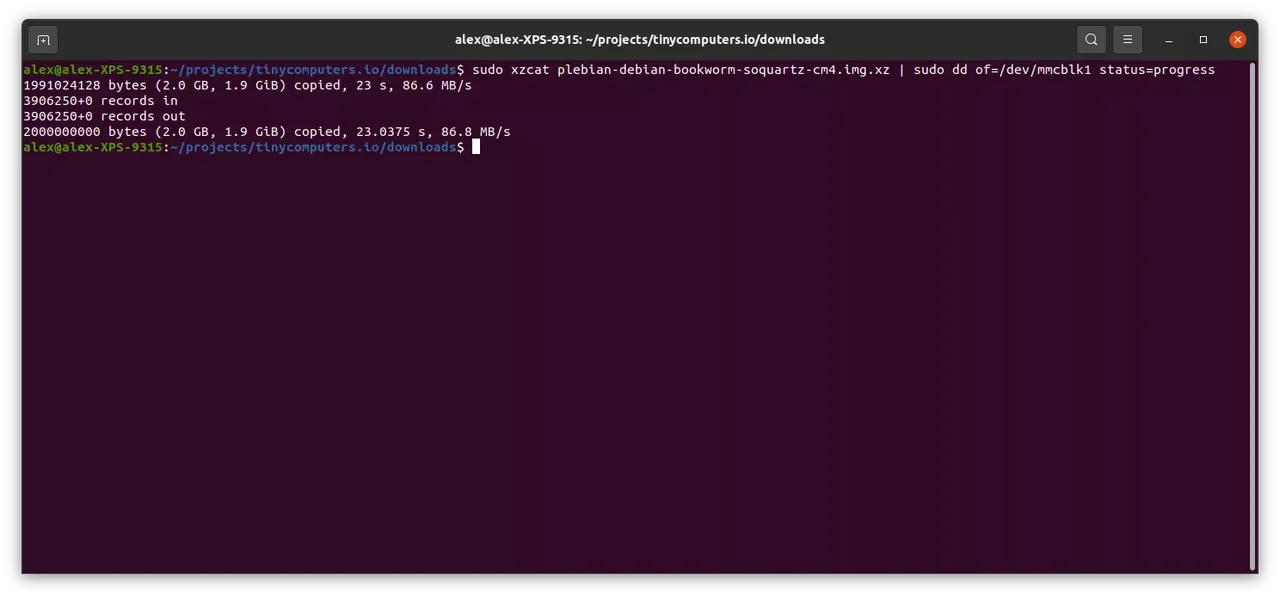

Getting Plebian running on a SOQuartz module is straightforward: write the appropriate image to an eMMC module, attach the eMMC to the SOQuartz, and place the SOQuartz into a carrier or IO board. You should get working HDMI, one Ethernet port, and USB. A quick rundown of the steps:

- Get a USB to eMMC adapter. Pine64 has one available, or you could try eBay; you may also need a micro SD to USB adapter.
- Get an eMMC module. Pine64 has a few available.
- Connect your eMMC module to the USB to eMMC adapter, then connect the adapter to your desktop/laptop/etc.
- Download a SOQuartz Plebian Linux disk image from Plebian Linux's GitHub Actions:
  - Download SOQuartz CM4 IO Board Image
  - Unzip the contents. You will end up with a file called plebian-debian-bookworm-soquartz-cm4.img.xz.
- Write the image to your eMMC module. You could use something like balenaEtcher or, if you're command-line-comfortable, dd:
  - balenaEtcher will take care of decompressing plebian-debian-bookworm-soquartz-cm4.img.xz.
  - Using dd, you can do something like this:

    ```bash
    xzcat plebian-debian-bookworm-soquartz-cm4.img.xz | sudo dd of=/dev/mmcblk1 bs=4M conv=fsync status=progress
    ```

    Replace /dev/mmcblk1 with the correct device for your USB to eMMC adapter (a USB reader often shows up as /dev/sdX; lsblk will list the candidates).
- Attach your eMMC module to your SOQuartz module, and attach the SOQuartz to an IO or carrier board.
- Attach peripherals and apply power. You will eventually be presented with a prompt to set the password for the user pleb.
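The xzcat | dd step can be wrapped in a small function that picks the right decompressor from the file extension and reports a failure from either side of the pipe. This is a minimal sketch, assuming GNU coreutils dd (for status=progress) and xz-utils; write_image is a hypothetical name, and the target should be double-checked with lsblk before running it as root.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a raw (.img) or xz-compressed (.img.xz) disk
# image to a target device. Double-check TARGET with lsblk before use:
# dd overwrites it without asking.
write_image() {
  local image="$1" target="$2" rc
  if [ ! -f "$image" ]; then
    echo "image not found: $image" >&2
    return 1
  fi
  case "$image" in
    *.xz) xz -dc -- "$image" ;;  # decompress to stdout, like xzcat
    *)    cat -- "$image" ;;     # already a raw image
  esac | dd of="$target" bs=4M conv=fsync status=progress
  # Fail if either the decompressor or dd failed, not just the last command.
  rc=("${PIPESTATUS[@]}")
  [ "${rc[0]}" -eq 0 ] && [ "${rc[1]}" -eq 0 ]
}
```

Run it from a root shell (or save it in a script and run that with sudo), for example write_image plebian-debian-bookworm-soquartz-cm4.img.xz /dev/mmcblk1.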

It would be more cost-effective to buy a SOQuartz module, a USB to eMMC adapter and an eMMC module all at once; for orders shipped to the United States, shipping is roughly a $9 flat rate.

Finally, if you really feel like going for an alternative to Linux, NetBSD will also work on the SOQuartz, but it is more complicated. You will need to download a Generic 64bit image from under the NetBSD-daily HEAD tab and write it to an eMMC module. Next, you will need to write the appropriate UEFI image from Jared McNeill's port of TianoCore to the Quartz64 family onto an SD card. UEFI and the disk image cannot exist on the same media.
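Because the UEFI firmware and the NetBSD image must live on different media, it is worth guarding against pointing both writes at the same device, which is easy to do when one target is given as a /dev/disk/by-id symlink. This is a minimal sketch, assuming both targets are given as whole-disk paths; check_separate_media is a hypothetical helper, and it does not detect one target being a partition of the other.

```bash
#!/usr/bin/env bash
# Hypothetical helper: fail if the UEFI target and the NetBSD target resolve
# to the same node. Symlinks (e.g. /dev/disk/by-id/...) are followed first.
check_separate_media() {
  local uefi_dev emmc_dev
  uefi_dev=$(readlink -f -- "$1") || return 2
  emmc_dev=$(readlink -f -- "$2") || return 2
  if [ "$uefi_dev" = "$emmc_dev" ]; then
    echo "error: UEFI ($1) and NetBSD ($2) point at the same device: $uefi_dev" >&2
    return 1
  fi
}
```

Something like check_separate_media /dev/sdb /dev/sdc && echo "safe to write both" before running the two dd commands; the device names here are placeholders.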

Pine64 sells SOQuartz modules directly from their site. The modules I have purchased and used are the 4GB models, which cost about $50 excluding shipping.

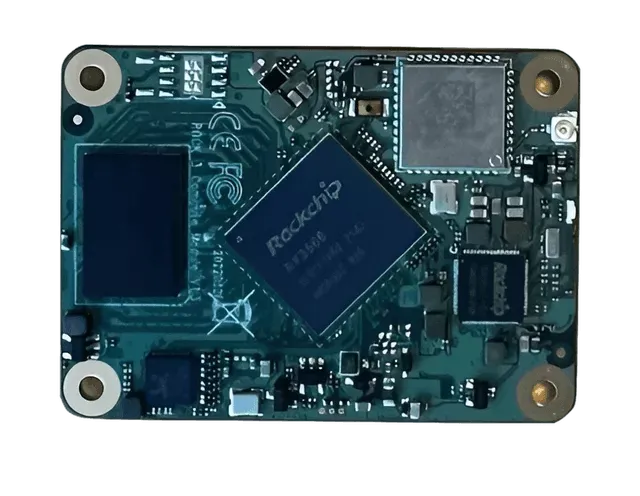

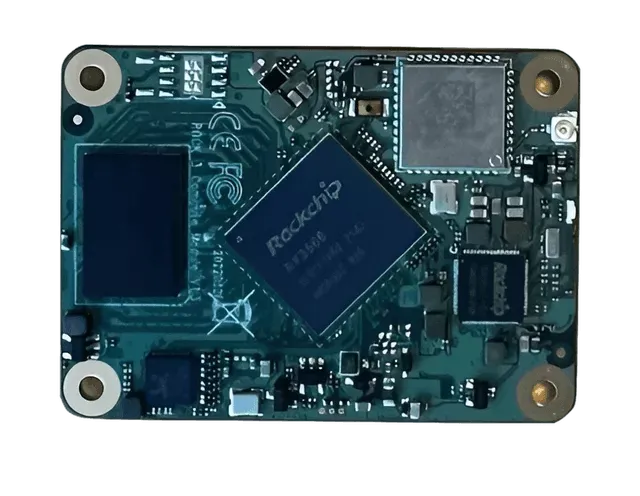

Radxa CM3

Radxa CM3, 4GB memory, without heatsinks

As far as performance goes, the Radxa CM3 sits just above Pine64's SOQuartz module but below the Raspberry Pi CM4. Radxa is better known for its Rock3 and Rock5 series of single board computers, available from ALLNET.China and eBay. The CM3 belongs to the Rock3 series of boards and modules, which features Rockchip RK3566/RK3568 processors; the RK3566 is also used in Pine64's Quartz64 and SOQuartz boards. Although the module works without issue on carrier and IO boards designed for the Raspberry Pi CM4, Radxa's own CM3 IO board exposes two SATA ports in addition to the single PCIe lane.

The CM3 has official Debian and Ubuntu distributions, but, like all the other compute modules, these are artisanally crafted specifically for the CM3. That means you cannot take an actual, official Debian or Ubuntu disk image for Arm64 and have it just work. Radxa does, however, maintain an up-to-date GitHub build pipeline that produces both Debian and Ubuntu images for the CM3. Just as the operating system needs to be different, so does the eMMC flashing: it is not done the way you would expect from a Raspberry Pi CM4 or even the Pine64 SOQuartz. To install Linux on the onboard eMMC, you need to use tools provided by Rockchip. The Radxa Wiki page for CM3 is a good place to start.

The CM3 and its installation process are about as far from Raspberry Pi CM4 territory as you will get among the modules in this article. The following instructions are available from wiki.radxa.com, but they are spread across a disparate set of pages, some of which describe the Rockchip tools and reference disk images without a clear, convenient place to download the files. This is an attempt to streamline the process. Let's get at it!

Core Features:

- On-module eMMC of 16 to 128GB

- Two SATA ports (when using the appropriate IO board)

The Rockchip tools are available on Windows as well as macOS/Linux. Downloading, compiling and running the macOS/Linux tool is straightforward; Windows involves a set of drivers and an executable tool.

Linux/macOS

- Install the necessary USB and autoconf packages (the apt-get line is for Debian/Ubuntu), then build and install rkdeveloptool:

```bash
sudo apt-get install libudev-dev libusb-1.0-0-dev dh-autoreconf pkg-config
git clone https://github.com/rockchip-linux/rkdeveloptool
cd rkdeveloptool
autoreconf -i
./configure
make
sudo make install
```

- Run rkdeveloptool --help to verify that it is installed

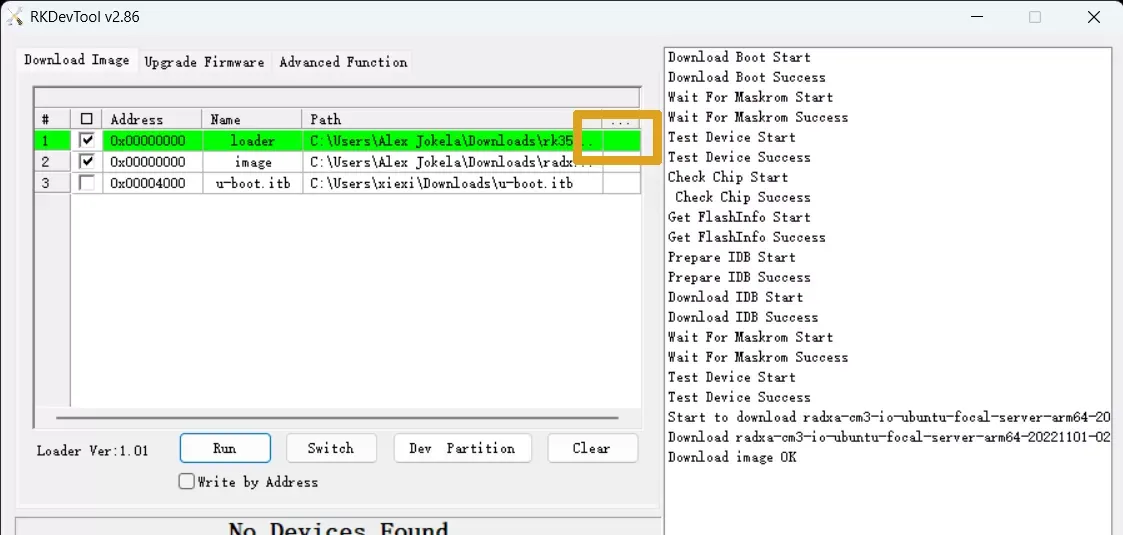

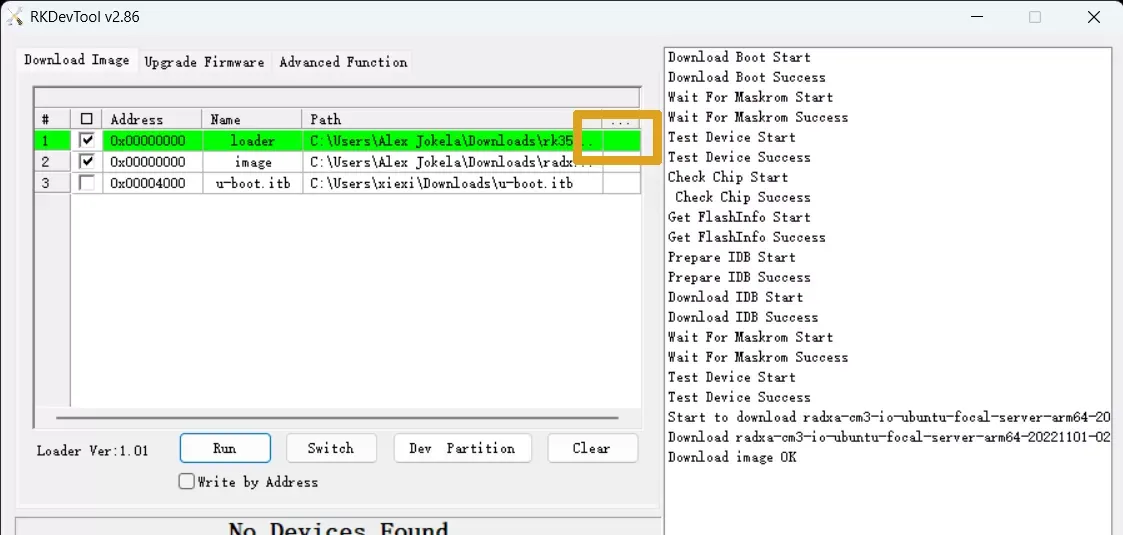

Windows

- Download RKDevTool
- Download RKDriverAssistant
- Unzip and execute RKDriverAssistant (DriverInstall.exe)
- Unzip RKDevTool
- Before executing the tool, you will want to change the language to English: change Chinese.ini to English.ini

These instructions assume you are using a Raspberry Pi CM4 IO Board.

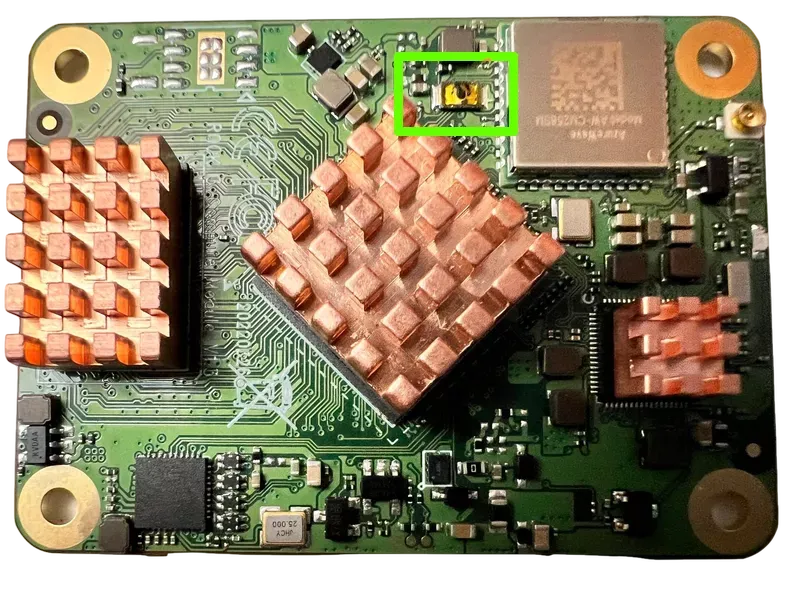

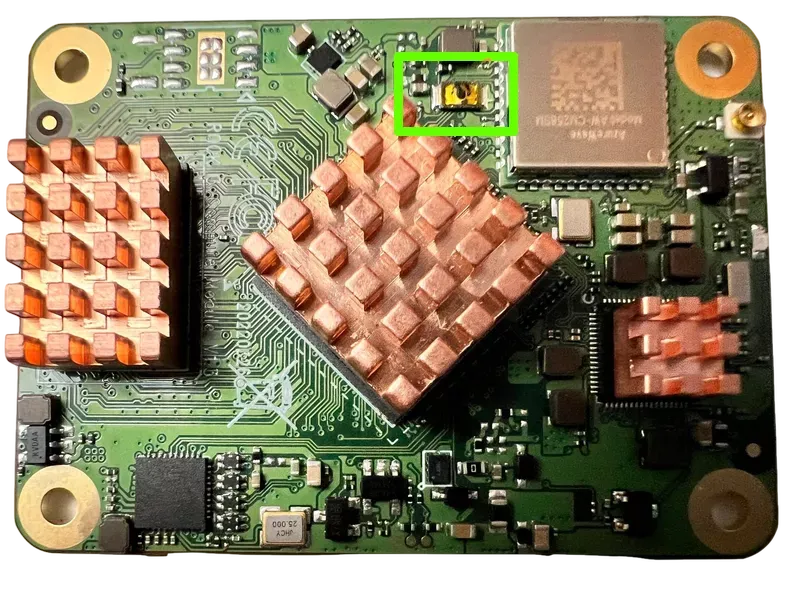

Boot into maskrom mode

- Unplug the board and remove any SD card.
- Plug a micro USB to USB Type-A cable into the micro USB port on the IO board, and plug the other end into your desktop or laptop. My laptop only has USB-C, so I had to use an adapter.
- On the CM3, there is a very tiny golden button; while pressing it, plug the power back in on the IO board.
- After a few seconds, you can stop pressing the button.
- Check for a USB device:
  - On Linux/macOS, lsusb should show: Bus 001 Device 112: ID 2207:350a Fuzhou Rockchip Electronics Company
  - On Windows, run RKDevTool; the status at the bottom of the application should read maskrom mode.

maskrom button
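Since the button timing can take a couple of tries, it can help to poll for the maskrom device instead of re-running lsusb by hand. This is a minimal sketch, assuming Linux with the usbutils lsusb and the 2207:350a ID shown in the lsusb output above; has_maskrom_device and wait_for_maskrom are hypothetical helpers.

```bash
#!/usr/bin/env bash
# 2207 is the Fuzhou Rockchip vendor ID; 350a is the product ID the CM3
# reported in maskrom mode in the lsusb output above.
MASKROM_ID="2207:350a"

# Succeed if lsusb-style text on stdin contains the maskrom device.
has_maskrom_device() {
  grep -qi "ID ${MASKROM_ID}"
}

# Poll lsusb once per second for up to TIMEOUT seconds (default 30).
wait_for_maskrom() {
  local timeout="${1:-30}" i
  for ((i = 0; i < timeout; i++)); do
    if lsusb | has_maskrom_device; then
      echo "maskrom device found"
      return 0
    fi
    sleep 1
  done
  echo "no maskrom device after ${timeout}s; re-check the button and cable" >&2
  return 1
}
```

Start wait_for_maskrom 60 in a terminal, then press the button and plug the power in.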

Flashing/Writing a Disk Image

You will need to download two files:

- rk356x_spl_loader_ddr1056_v1.06.110.bin

- A Radxa CM3 disk image from https://wiki.radxa.com/Rock3/downloads or https://github.com/radxa-build/radxa-cm3-io/releases/latest or this mirror

We will be using radxa-cm3-io-ubuntu-focal-server-arm64-20221101-0254-gpt.img.xz; it is advisable to follow the latest-release link above and download a more recent disk image.

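Linux Flashing

rkdeveloptool wl writes the file it is given byte for byte, so decompress the .xz image before writing it. Depending on your USB permissions, you may need to prefix these commands with sudo.

```bash
# Confirm the CM3 shows up in maskrom mode
rkdeveloptool ld
# DevNo=1 Vid=0x2207,Pid=0x350a,LocationID=104 Maskrom
rkdeveloptool db rk356x_spl_loader_ddr1056_v1.06.110.bin
xz -d radxa-cm3-io-ubuntu-focal-server-arm64-20221101-0254-gpt.img.xz
rkdeveloptool wl 0 radxa-cm3-io-ubuntu-focal-server-arm64-20221101-0254-gpt.img
# Reboot the CM3
rkdeveloptool rd
```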

Windows Flashing

You will need to specify a loader as well as an image. In the table on the left side of the screenshot, click the right-most rectangle of the first row. This should bring up a file dialog box; navigate to where you downloaded rk356x_spl_loader_ddr1056_v1.06.110.bin. Do the same for the second row (image), navigating to the decompressed radxa-cm3-io-ubuntu-focal-server-arm64-20221101-0254-gpt.img (extract it from the .img.xz download first, for example with 7-Zip).

Click Run

This operation will take several minutes; be patient.

The CM3 should automatically boot and bring you to a login prompt. The default user is rock, with a password of rock.

The Radxa CM3 can be purchased from ALLNET.China for about $70 excluding shipping.

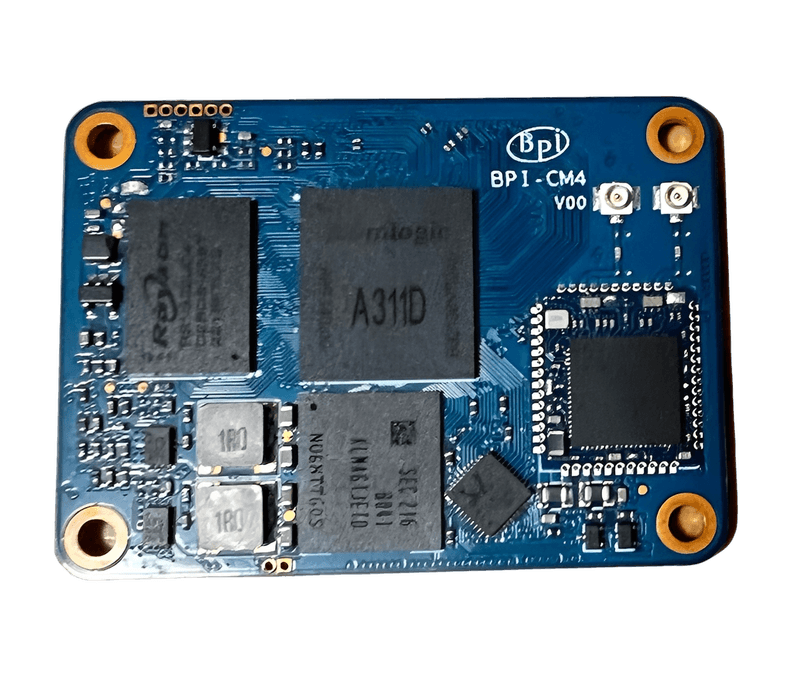

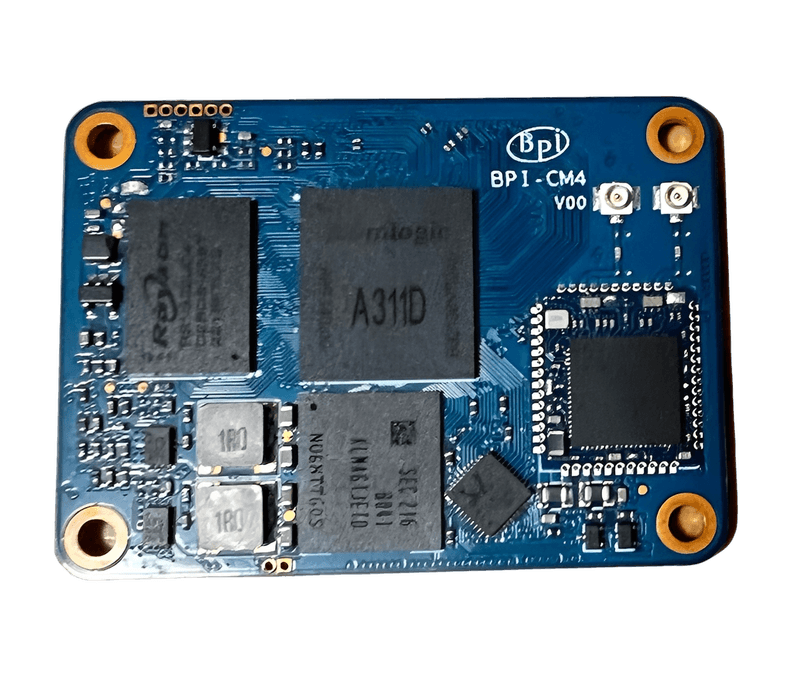

Banana Pi CM4

Banana Pi CM4, 4GB memory

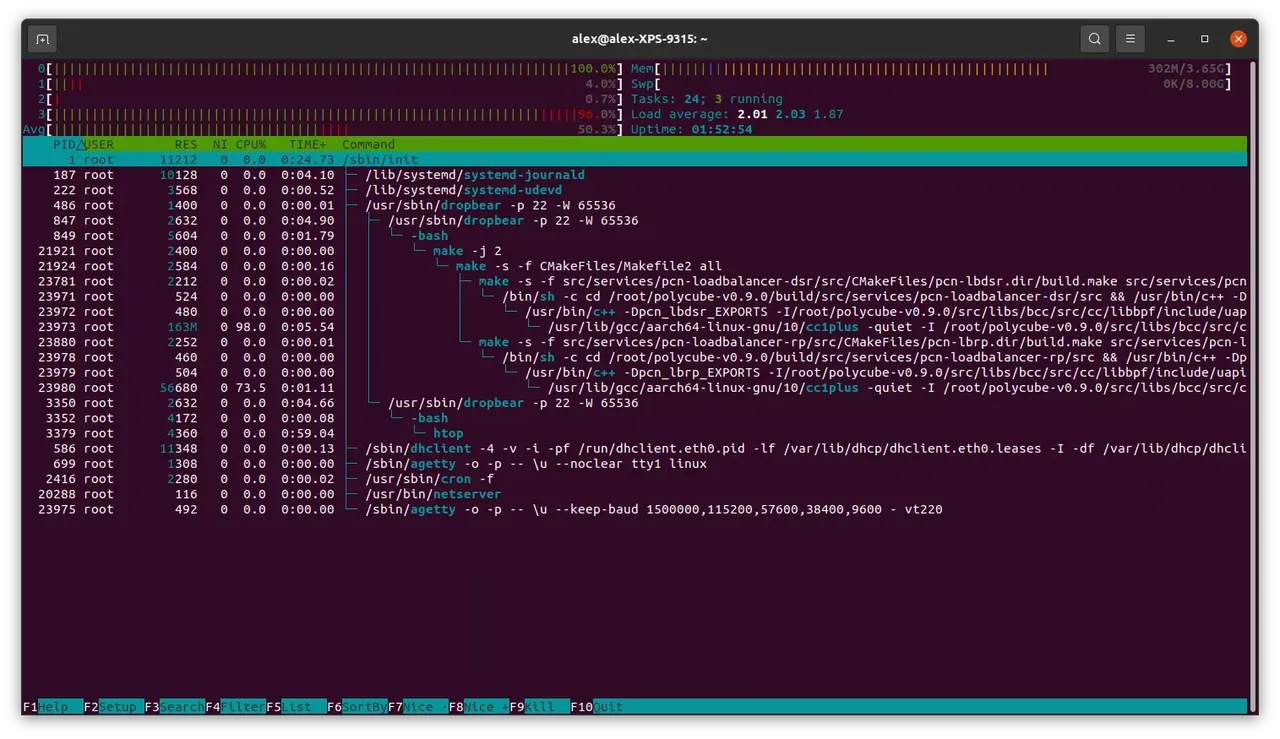

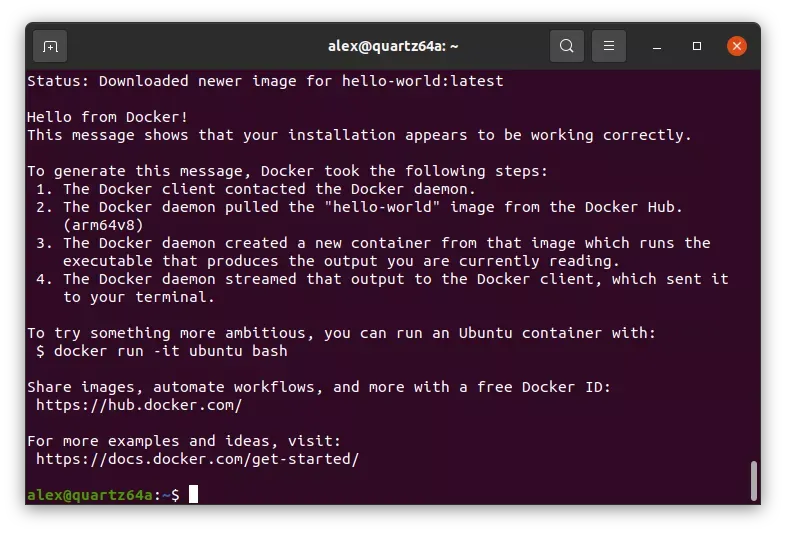

Looking at the Geekbench scores in the table above, you will notice that the Banana Pi CM4 seriously outperforms the other three modules I have tested. It is also the most expensive module: including shipping, it cost about $120. This was not an inflated price like those currently charged for Raspberry Pi modules; I bought it directly from Sinovoip, the company behind the Banana Pi family of single board computers. But before you start searching for one, note that as of this writing, I purchased the last module Sinovoip had allocated to developers and testers, and they have not yet started commercial production.

Core Features:

As with the Radxa CM3, operating system support is very limited. For very detailed instructions on installing an operating system (in this case, Android), check out https://wiki.banana-pi.org/Getting_Started_with_CM4.

Installing and booting Linux is fairly straightforward. You can boot either from an SD card or from the on-board eMMC module. Either way, you will need an SD card.

Head over to https://wiki.banana-pi.org/Banana_Pi_BPI-M2S#Linux, and you will find a table of distribution images similar to this one:

Distributions

Ubuntu

- 2022-06-20-ubuntu-20.04-mate-desktop-bpi-m2s-aarch64-sd-emmc.img.zip
  - Baidu Cloud: https://pan.baidu.com/s/1kRukI-H-xliNqIqVacXWRw?pwd=8888 (pincode: 8888)
  - Google Drive: https://drive.google.com/file/d/1P2YQUwdrREdiwidr8YtCvOdMmwLPerVu/view
  - S3 Mirror: https://s3.us-east-1.amazonaws.com/cdn.tinycomputers.io/banana-pi-m2s-cm4-linux/2022-06-20-ubuntu-20.04-mate-desktop-bpi-m2s-aarch64-sd-emmc.img.zip
  - MD5: 2945f225eadba1b350cd49f47817c0cd
- 2022-06-20-ubuntu-20.04-server-bpi-m2s-aarch64-sd-emmc.img.zip
  - Baidu Cloud: https://pan.baidu.com/s/1UoYR0k9YH9SE_A-MpqZ2fg?pwd=8888 (pincode: 8888)
  - Google Drive: https://drive.google.com/file/d/1y0DUVDhLyhw_C7p6SD2q1EjOZLEV_c_w/view
  - S3 Mirror: https://s3.us-east-1.amazonaws.com/cdn.tinycomputers.io/banana-pi-m2s-cm4-linux/2022-06-20-ubuntu-20.04-server-bpi-m2s-aarch64-sd-emmc.img.zip
  - MD5: 9b17a00cbc17c46e414a906e659e7ca2

Debian

- 2022-06-20-debian-10-buster-bpi-m2s-aarch64-sd-emmc.img.zip
  - Baidu Cloud: https://pan.baidu.com/s/1TTsdyy5I7HLWS_Tptg7r2w?pwd=8888 (pincode: 8888)
  - Google Drive: https://drive.google.com/file/d/116ZydpggYpZ1WoSyVsc4QuchdIa3vGyI/view
  - S3 Mirror: https://s3.us-east-1.amazonaws.com/cdn.tinycomputers.io/banana-pi-m2s-cm4-linux/2022-06-20-debian-10-buster-bpi-m2s-aarch64-sd-emmc.img.zip
  - MD5: 9d39558ad37e5da47d7d144c8afec45e
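The MD5 values listed with each download make it easy to confirm a file arrived intact before flashing it, which matters when pulling multi-gigabyte images from mirrors. This is a minimal sketch, assuming GNU coreutils md5sum (on macOS the equivalent is md5 -q) and that each published digest refers to the .zip file as downloaded; verify_md5 is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare a downloaded file's MD5 digest against the
# value published next to the download link.
verify_md5() {
  local file="$1" expected="$2" actual
  if [ ! -f "$file" ]; then
    echo "missing: $file" >&2
    return 1
  fi
  # Hash from stdin so md5sum prints "<digest>  -"; keep only the digest.
  actual=$(md5sum < "$file" | cut -d ' ' -f 1)
  if [ "$actual" = "$expected" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file (expected $expected, got $actual)" >&2
    return 1
  fi
}
```

For the Debian image: verify_md5 2022-06-20-debian-10-buster-bpi-m2s-aarch64-sd-emmc.img.zip 9d39558ad37e5da47d7d144c8afec45e.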

Flashing/Writing Images

Let's assume we are using 2022-06-20-debian-10-buster-bpi-m2s-aarch64-sd-emmc.img.zip. The handiest thing to start with is a bootable SD card. On your laptop or desktop computer, and assuming you are using a flavor of Linux, issue the following at a command line:

```bash
unzip 2022-06-20-debian-10-buster-bpi-m2s-aarch64-sd-emmc.img.zip
sudo dd if=2022-06-20-debian-10-buster-bpi-m2s-aarch64-sd-emmc.img of=/dev/sdX bs=4M conv=fsync status=progress
```

Replace /dev/sdX with the SD card's whole-disk device (for example /dev/sdb), not a partition such as /dev/sdb1; partitions are numbered from 1, so there is no /dev/sda0.
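Writing an image to a partition instead of the whole disk is an easy mistake, and /dev/sda0 is exactly that kind of name. As a quick sanity check before running dd, a name-based heuristic can reject partition-style names. This is a sketch only: looks_like_whole_disk is a hypothetical helper covering the common sd, mmcblk and nvme naming patterns, and lsblk -dno TYPE /dev/sdX (which prints disk for a whole disk) is the more authoritative check.

```bash
#!/usr/bin/env bash
# Hypothetical, name-based heuristic: succeed only for names that look like
# whole disks (/dev/sdb, /dev/mmcblk0, /dev/nvme0n1) and fail for partitions
# (/dev/sdb1, /dev/mmcblk0p1, /dev/nvme0n1p1) or anything else.
looks_like_whole_disk() {
  local dev="$1"
  if [[ "$dev" =~ ^/dev/sd[a-z]+$ ]]; then return 0; fi
  if [[ "$dev" =~ ^/dev/mmcblk[0-9]+$ ]]; then return 0; fi
  if [[ "$dev" =~ ^/dev/nvme[0-9]+n[0-9]+$ ]]; then return 0; fi
  return 1
}
```

For example: looks_like_whole_disk /dev/sdb && sudo dd if=... of=/dev/sdb bs=4M conv=fsync status=progress.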

Insert the SD card into the IO board and apply power. That's it for booting from an SD card. To boot from eMMC, follow the same steps, but instead of downloading and writing the image on your laptop or desktop, do it on the BPI CM4 itself while it is running from the SD card. Download and unzip the image file, then write it to the eMMC:

```bash
unzip 2022-06-20-debian-10-buster-bpi-m2s-aarch64-sd-emmc.img.zip
sudo dd if=2022-06-20-debian-10-buster-bpi-m2s-aarch64-sd-emmc.img of=/dev/mmcblk0 bs=4M conv=fsync status=progress
```

Check with lsblk that /dev/mmcblk0 is the eMMC and not the SD card you booted from.

Now, power down the IO board and remove the SD card. Apply power once more, and you should be booting from eMMC.
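When the dd to eMMC runs on the BPI CM4 itself, the worst mistake is overwriting the SD card the system is currently running from. A small guard can compare the target with the disk that holds the root filesystem. This is a minimal sketch, assuming findmnt from util-linux reports a /dev node for / (it will not on overlay or network roots); parent_disk and ensure_not_root_disk are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: map a partition node to its parent disk, then refuse
# a target that is the disk holding the running root filesystem.
parent_disk() {
  local dev="$1"
  case "$dev" in
    /dev/mmcblk*p[0-9]*|/dev/nvme*p[0-9]*) echo "${dev%p[0-9]*}" ;;  # mmcblk1p2 -> mmcblk1
    /dev/sd*) echo "${dev%%[0-9]*}" ;;                                # sdb1 -> sdb
    *) echo "$dev" ;;                                                 # already a disk
  esac
}

# Succeed only if TARGET is on a different disk than ROOT_SOURCE, which
# defaults to whatever findmnt reports as the source of /.
ensure_not_root_disk() {
  local target="$1" root_source="${2:-$(findmnt -no SOURCE /)}"
  if [ "$(parent_disk "$target")" = "$(parent_disk "$root_source")" ]; then
    echo "refusing: $target holds the running root filesystem ($root_source)" >&2
    return 1
  fi
}
```

On the CM4, ensure_not_root_disk /dev/mmcblk0 && sudo dd ... would then stop before dd if mmcblk0 turned out to be the SD card.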

As a side note, when I first booted my Banana Pi CM4, it began an unattended system update that took a while to complete. It is best to let it finish before doing any serious work; you can use top to check on the running processes.

Other bits of information:

As of this writing, the Banana Pi CM4 is unavailable for general purchase.

Final Thoughts

If you need to operate in a familiar environment, go with the Raspberry Pi CM4. As of this writing, you will pay a premium (a 100% markup or more) for the high-priced CM4s available on eBay or Amazon. If you need performance and do not need to use crazy shields and HATs, go with the Banana Pi CM4; the catch is that it has not been released yet. It is hands down the most robust compute module. If you are looking to use bleeding-edge Linux and want a bit of a challenge, the Pine64 SOQuartz module is for you. That leaves the Radxa CM3: if you are willing to use the Rockchip tools to flash the eMMC, are not concerned with software compatibility for shields and HATs, and want performance similar to an RPi CM4, the Radxa might be a good choice.