The Orange Pi RV2: Cost-effective 8-core RISC-V development board

When the Orange Pi RV2 arrived for testing, it represented something fundamentally different from the dozens of ARM and x86 single board computers that have crossed my desk over the years. This wasn't just another Cortex-A76 board with slightly tweaked specifications or a new Intel Atom variant promising better performance-per-watt. The Orange Pi RV2, powered by the Ky(R) X1 processor, is one of the first commercially available RISC-V single board computers aimed at the hobbyist and developer market. It's a glimpse into a future where processor architecture diversity might finally break the ARM-x86 duopoly that has long dominated single board computing.

But is RISC-V ready for prime time? Can it compete with the mature ARM ecosystem that powers everything from smartphones to supercomputers, or the x86 architecture that has dominated desktop and server computing for over four decades? I put the Orange Pi RV2 through the same rigorous benchmarking suite I use for all single board computers, comparing it directly against established platforms including the Raspberry Pi 5, Raspberry Pi Compute Module 5, Orange Pi 5 Max, and LattePanda IOTA. The results tell a fascinating story about where RISC-V stands today and where it might be heading.

What is RISC-V and Why Does it Matter?

Before diving into performance numbers, it's worth understanding what makes RISC-V different. Unlike ARM or x86, RISC-V is an open instruction set architecture. This means anyone can implement RISC-V processors without paying ISA licensing fees or negotiating complex agreements with an architecture owner. The specification is maintained by RISC-V International, a non-profit organization, and the ratified base ISA is frozen: new functionality arrives as optional extensions rather than as changes to the base.

This openness has led to an explosion of academic research and commercial implementations. Companies like SiFive, Alibaba, and now apparently Ky have developed RISC-V cores targeting everything from embedded microcontrollers to high-performance application processors. The promise is compelling: a truly open architecture that could democratize processor design and break vendor lock-in.

However, openness alone doesn't guarantee performance or ecosystem maturity. The RISC-V software ecosystem is still catching up to ARM and x86, with toolchains, operating systems, and applications at various stages of optimization. The Orange Pi RV2 gives us a real-world test of where this ecosystem stands in late 2024 and early 2025.

The Orange Pi RV2: Specifications and Setup

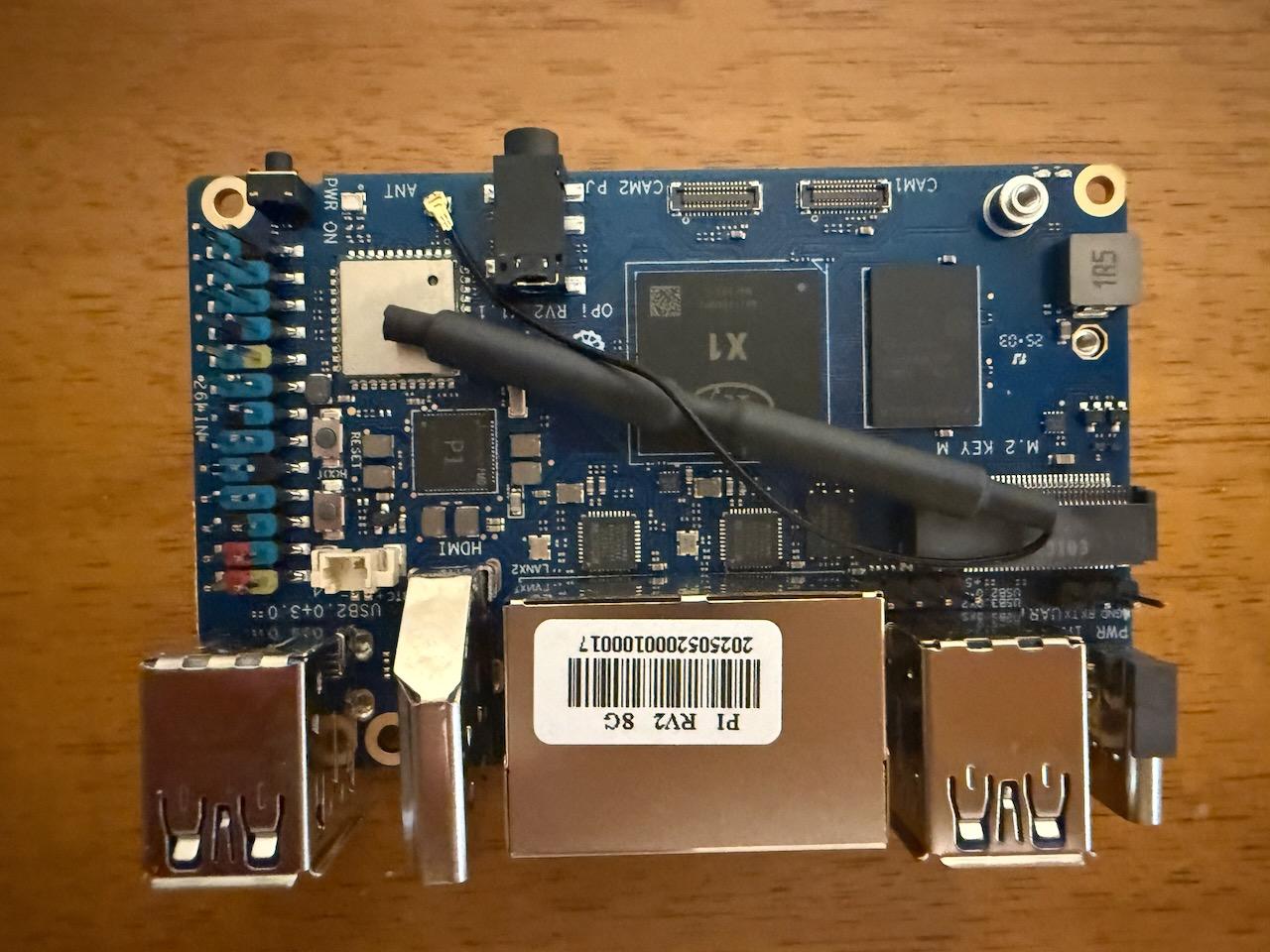

Top view showing the Ky X1 RISC-V processor and 8GB RAM

The Orange Pi RV2 features the Ky(R) X1 processor, an 8-core RISC-V chip running at up to 1.6 GHz. The system ships with Orange Pi's custom Linux distribution based on Ubuntu Noble, running kernel 6.6.63-ky. The board includes 8GB of RAM, sufficient for most development tasks and light server workloads.

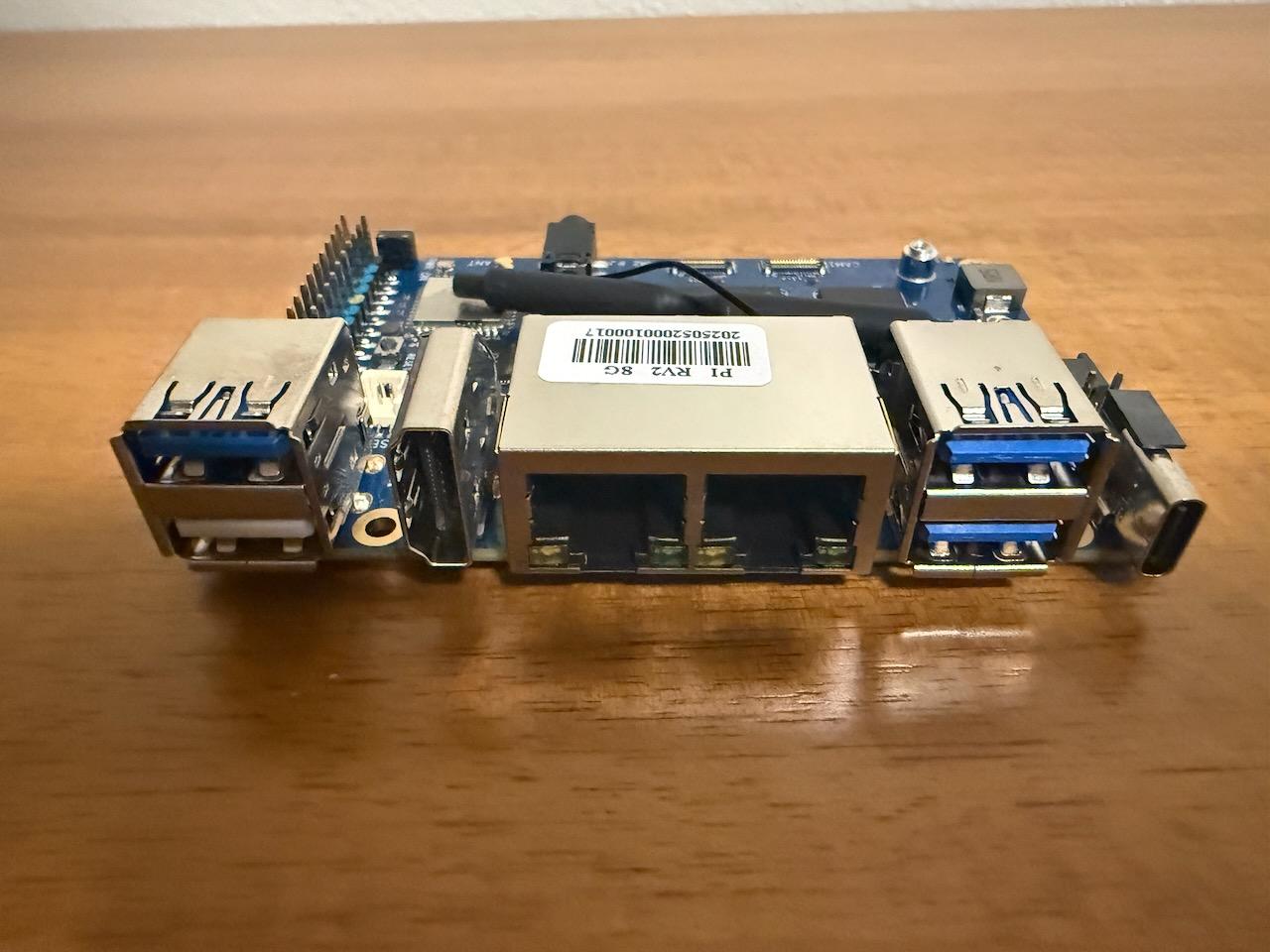

Side view showing USB 3.0 ports, Gigabit Ethernet, and HDMI connectivity

Setting up the Orange Pi RV2 proved straightforward. The board boots from SD card and includes SSH access out of the box. Installing Rust, the language I use for compilation benchmarks, required building from source rather than using rustup, as RISC-V support in rustup is still evolving. Once installed, I had rustc 1.90.0 and cargo 1.90.0 running successfully.

The system presents itself as:

Linux orangepirv2 6.6.63-ky #1.0.0 SMP PREEMPT Wed Mar 12 09:04:00 CST 2025 riscv64 riscv64 riscv64 GNU/Linux

One immediate observation: this kernel was compiled in March 2025, suggesting very recent development. This is typical of the RISC-V SBC space right now - these boards are so new that kernel and userspace support is being actively developed, sometimes just weeks or months before the hardware ships.

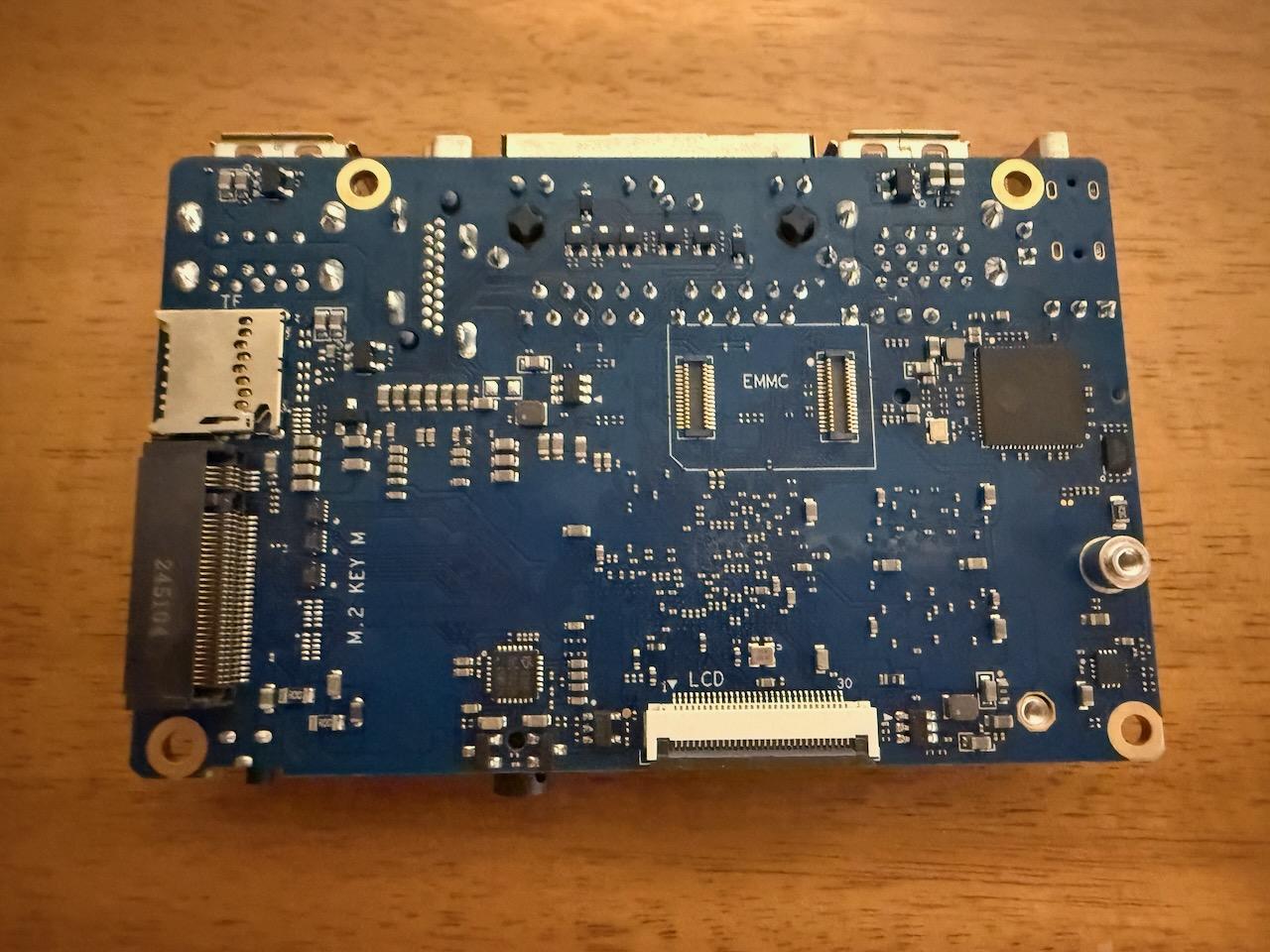

Bottom view showing eMMC connector and M.2 key expansion

The Competition: ARM64 and x86_64 Platforms

To properly evaluate the Orange Pi RV2, I compared it against four other single board computers representing the current state of ARM and x86 in this form factor.

The Raspberry Pi 5 and Raspberry Pi Compute Module 5 both feature the Broadcom BCM2712 with four Cortex-A76 cores running at 2.4 GHz. These are Raspberry Pi's current flagship boards, widely regarded as the gold standard for hobbyist and education-focused SBCs. The standard Pi 5 averaged 76.65 seconds in compilation benchmarks, while the CM5 came in slightly faster at roughly 74 seconds, demonstrating the maturity of ARM's Cortex-A76 architecture.

The Orange Pi 5 Max takes a different approach with its Rockchip RK3588 SoC, featuring a big.LITTLE configuration with four Cortex-A76 cores and four Cortex-A55 efficiency cores, totaling eight cores. This heterogeneous architecture allows the system to balance performance and power consumption. In my testing, the Orange Pi 5 Max posted the fastest compilation times among the ARM platforms, leveraging all eight cores effectively.

On the x86 side, the LattePanda IOTA features Intel's N150 processor, a quad-core chip from the Twin Lake family, Intel's refresh of Alder Lake-N. This represents Intel's current low-power x86 offering, designed to compete directly with ARM in the SBC and mini-PC market. The N150 delivered solid performance with an average compilation time of 72.21 seconds, demonstrating that x86 remains competitive in this space.

Compilation Performance: The Rust Test

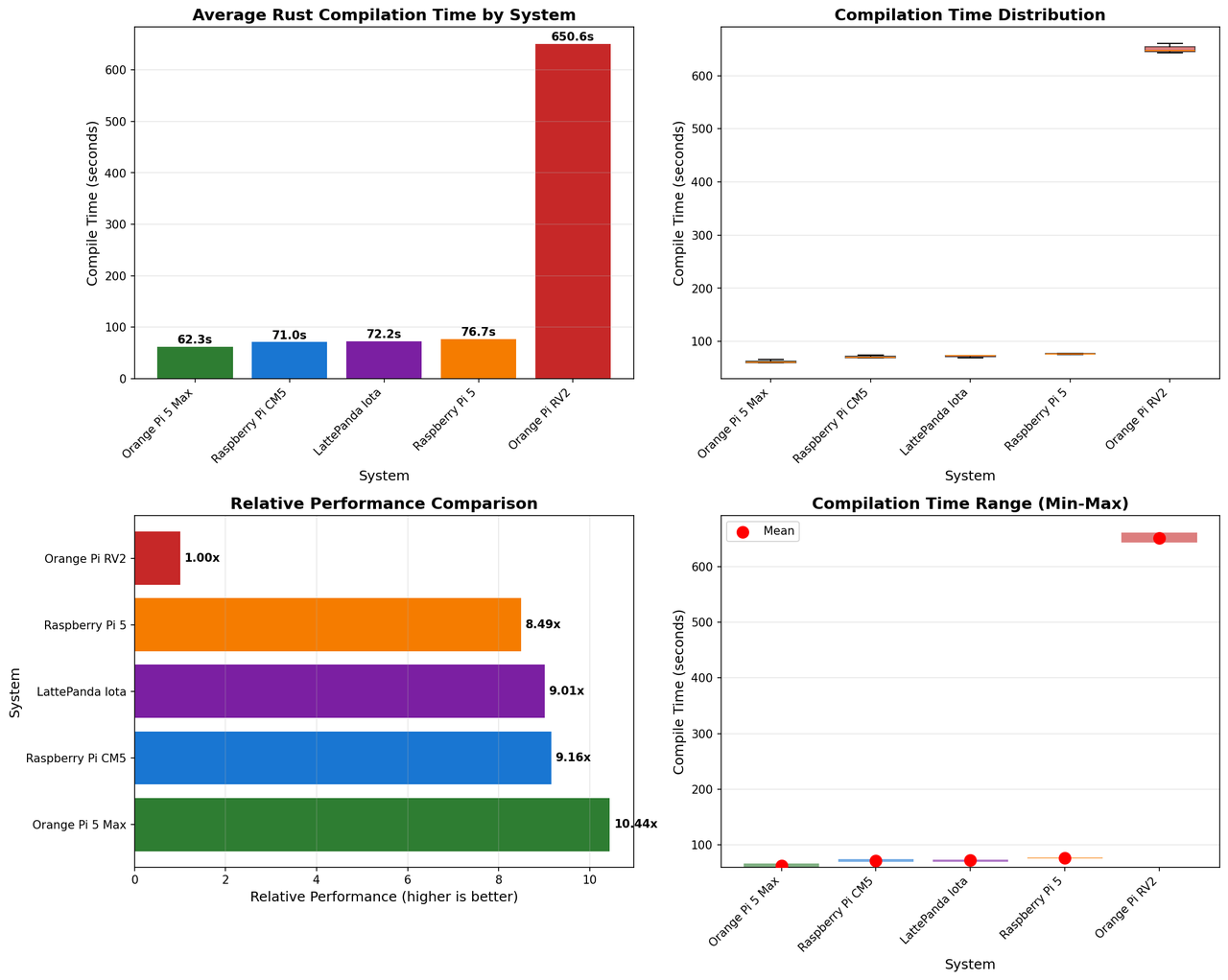

Comprehensive compilation performance comparison across all platforms

My primary benchmark involves compiling a Rust project - specifically, a ballistics engine with significant computational complexity and numerous dependencies. This real-world workload stresses the CPU, memory subsystem, and compiler toolchain in ways that synthetic benchmarks often miss. I perform three clean compilation runs on each system and average the results.
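The procedure described above (three clean builds, then an average) can be sketched as a small timing harness. This is a minimal sketch under assumptions, not the exact script used for this review: the function name `time_clean_builds` is hypothetical, and a release build via `cargo build --release` is assumed because the build profile isn't stated here.

```python
import statistics
import subprocess
import time


def time_clean_builds(project_dir, runs=3, run=subprocess.run, clock=time.perf_counter):
    """Time `runs` clean builds of a Cargo project; return (timings, mean) in seconds."""
    timings = []
    for _ in range(runs):
        # Remove previous build artifacts so every run compiles from scratch.
        run(["cargo", "clean"], cwd=project_dir, check=True)
        start = clock()
        # Assumption: release profile; swap the arguments if you measure debug builds.
        run(["cargo", "build", "--release"], cwd=project_dir, check=True)
        timings.append(clock() - start)
    return timings, statistics.mean(timings)
```

Calling `time_clean_builds("path/to/crate")` returns the individual timings and their mean. The `run` and `clock` parameters exist only so the harness can be exercised without invoking cargo. Running `cargo fetch` once beforehand keeps dependency downloads out of the first measurement.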

The results were striking:

- Orange Pi 5 Max (ARM64, RK3588, 8 cores): 62.35 seconds average

- LattePanda IOTA (x86_64, Intel N150, 4 cores): 72.21 seconds average

- Raspberry Pi CM5 (ARM64, BCM2712, 4 cores): ~74 seconds average

- Raspberry Pi 5 (ARM64, BCM2712, 4 cores): 76.65 seconds average

- Orange Pi RV2 (RISC-V, Ky X1, 8 cores): 650.60 seconds average

The Orange Pi RV2's compilation times of 661.25, 647.39, and 643.16 seconds averaged out to 650.60 seconds - more than ten times slower than the Orange Pi 5 Max and about 8.5 times slower than the Raspberry Pi 5. Despite having eight cores compared to the Pi 5's four, the RISC-V platform lagged dramatically behind.
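For anyone who wants to check the arithmetic, the average and the slowdown factors can be reproduced from the figures above. The variable names are illustrative, and the CM5 is left out because only an approximate figure (~74 seconds) is given for it.

```python
import statistics

# Per-run and average times in seconds, copied from the results above.
rv2_runs = [661.25, 647.39, 643.16]
baselines = {
    "Orange Pi 5 Max": 62.35,
    "LattePanda IOTA": 72.21,
    "Raspberry Pi 5": 76.65,
}

rv2_mean = statistics.mean(rv2_runs)
# Slowdown factor = RV2 average divided by the other board's average.
slowdowns = {name: rv2_mean / secs for name, secs in baselines.items()}

print(f"Orange Pi RV2 mean: {rv2_mean:.2f} s")
for name, factor in slowdowns.items():
    print(f"vs {name}: {factor:.2f}x slower")
```

This prints a mean of 650.60 seconds and slowdown factors of 10.43x, 9.01x, and 8.49x respectively.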

This performance gap isn't simply about clock speeds or core counts. The Orange Pi RV2 runs at 1.6 GHz compared to the Pi 5's 2.4 GHz, but that 1.5x difference in frequency doesn't come close to explaining an 8.5x difference in compilation time. Instead, we're seeing the combined effect of several factors:

- Processor microarchitecture maturity - ARM's Cortex-A76 represents over a decade of iterative improvement, while the Ky X1 is a first-generation design

- Compiler optimization - LLVM's ARM backend has been optimized for years, while RISC-V support is much newer

- Memory subsystem performance - the Ky X1's memory controller and cache hierarchy appear significantly less optimized

- Single-threaded performance - compilation is often limited by single-threaded tasks, where the ARM cores have a significant advantage

It's worth noting that the Orange Pi RV2 showed good consistency across runs, with only about 2.8 percent variation between the fastest and slowest compilation. This suggests the hardware itself is stable; it's simply not competitive with current ARM or x86 offerings for this workload.
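Both the run-to-run spread and the clock-speed argument can be checked with a few lines of arithmetic. This is a rough sketch: the spread is taken relative to the fastest run, and dividing by the clock ratio is a crude normalization that ignores core count, caches, and memory bandwidth.

```python
rv2_runs = [661.25, 647.39, 643.16]  # seconds, from the results above

# Spread between slowest and fastest run, relative to the fastest.
spread = (max(rv2_runs) - min(rv2_runs)) / min(rv2_runs)

clock_ratio = 2.4 / 1.6        # Pi 5 clock over RV2 clock
time_ratio = 650.60 / 76.65    # RV2 average over Pi 5 average
# Gap that remains after (naively) scaling away the clock difference.
residual_gap = time_ratio / clock_ratio

print(f"run-to-run spread: {spread * 100:.1f}%")
print(f"clock ratio: {clock_ratio:.2f}x, time ratio: {time_ratio:.2f}x")
print(f"gap not explained by clock speed: {residual_gap:.2f}x")
```

The spread comes out at 2.8 percent, and roughly a 5.7x gap remains after accounting for frequency, even though the RV2 has twice as many cores as the Pi 5.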

The Ecosystem Challenge: Toolchains and Software

Beyond raw performance, the RISC-V ecosystem faces significant maturity challenges. This became evident when attempting to run llama.cpp, the popular framework for running large language models locally. Following Jeff Geerling's guide for building llama.cpp on RISC-V, I immediately hit toolchain issues.

The llama.cpp build system detected RISC-V vector extensions and attempted to compile with -march=rv64gc_zfh_v_zvfh_zicbop, which requests half-precision floating point (zfh), the vector extension (v), vector half-precision floating point (zvfh), and cache-block prefetch hints (zicbop) on top of the rv64gc baseline. However, the GCC 13.3.0 compiler shipping with Orange Pi's Linux distribution didn't fully support these extensions, producing errors about unexpected ISA strings.
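To make that -march string less opaque, here is a simplified sketch that splits it into its parts. The parser (`parse_march`) is hypothetical and deliberately minimal: it assumes underscore-separated extensions and doesn't handle version suffixes or every form that real toolchains accept.

```python
def parse_march(march):
    """Split a RISC-V -march string into (base, single_letter_exts, multi_letter_exts)."""
    head, *rest = march.lower().split("_")
    if not head.startswith(("rv32", "rv64")):
        raise ValueError(f"not a RISC-V ISA string: {march!r}")
    base, letters = head[:4], list(head[4:])
    multi = []
    for token in rest:
        # Single-letter extensions such as "v" can also appear after an underscore.
        if len(token) == 1:
            letters.append(token)
        else:
            multi.append(token)
    return base, letters, multi


# Short descriptions of the extensions that appear in the string above.
DESCRIPTIONS = {
    "g": "general-purpose bundle (IMAFD plus Zicsr and Zifencei)",
    "c": "compressed 16-bit instructions",
    "v": "vector extension",
    "zfh": "half-precision floating point",
    "zvfh": "vector half-precision floating point",
    "zicbop": "cache-block prefetch hints",
}

base, letters, multi = parse_march("rv64gc_zfh_v_zvfh_zicbop")
for ext in letters + multi:
    print(f"{base} + {ext}: {DESCRIPTIONS.get(ext, 'unknown')}")
```

Everything beyond `g` and `c` in this string is optional functionality that both the compiler and the hardware have to agree on, which is where the build went wrong.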

The workaround was to disable RISC-V vector support entirely:

cmake -B build -DLLAMA_CURL=OFF -DGGML_RVV=OFF -DGGML_NATIVE=OFF

By compiling with basic rv64gc instructions only - essentially the baseline RISC-V instruction set without advanced SIMD capabilities - the build succeeded. But this immediately highlights a key ecosystem problem: the mismatch between hardware capabilities, compiler support, and software assumptions.

On ARM or x86 platforms, these issues were solved years ago. When you compile llama.cpp on a Raspberry Pi 5, it automatically detects and uses NEON SIMD instructions. On x86, it leverages AVX2 or AVX-512 if available. The toolchain, runtime detection, and fallback mechanisms all work seamlessly because they've been tested and refined over countless deployments.

RISC-V is still working through these growing pains. The vector extensions exist in the specification and are implemented in hardware on some processors, but compiler support varies, software doesn't always detect capabilities correctly, and fallback paths aren't always reliable. This forced me to compile llama.cpp in its least optimized mode, guaranteeing compatibility but leaving significant performance on the table.
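One piece of that detection puzzle can be illustrated from userspace. On RISC-V Linux, /proc/cpuinfo carries an `isa` line per hart, and a script can check it for the vector extension. The sketch below is an assumption-laden illustration rather than how llama.cpp detects features, the helper name `harts_with_vector` is hypothetical, and the sample text is made up for the example rather than captured from the Orange Pi RV2.

```python
def harts_with_vector(cpuinfo_text):
    """Return one bool per `isa` line: True when the single-letter V extension is listed."""
    flags = []
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if not sep or key.strip() != "isa":
            continue
        tokens = value.strip().lower().split("_")
        # tokens[0] looks like "rv64imafdcv"; skip the 4-character "rv64"/"rv32" prefix.
        single_letters = tokens[0][4:]
        flags.append("v" in single_letters or "v" in tokens[1:])
    return flags


# Hypothetical sample for illustration, not captured from the Orange Pi RV2.
SAMPLE = "processor\t: 0\nhart\t\t: 0\nisa\t\t: rv64imafdcv_zicbom_zicboz\nmmu\t\t: sv39\n"
print(harts_with_vector(SAMPLE))
```

On a real board you would pass in the contents of /proc/cpuinfo. Note that hardware advertising the extension is only half of the story: the compiler still has to accept the matching -march string, which is exactly where this build failed.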

Running LLMs on RISC-V: TinyLlama Performance

Despite the toolchain challenges, I successfully built llama.cpp and downloaded TinyLlama 1.1B in Q4_K_M quantization - a relatively small language model suitable for testing on resource-constrained devices. Running inference revealed exactly what you'd expect given the compilation benchmarks: functional but slow performance.

Prompt processing achieved 0.87 tokens per second, taking 1,148 milliseconds per token to encode the input. Token generation during the actual response was even slower at 0.44 tokens per second, or 2,250 milliseconds per token. Generating a 49-token response to "What is RISC-V?" took 110 seconds in total.

For context, the same TinyLlama model on a Raspberry Pi 5 typically achieves 5-8 tokens per second, while the LattePanda IOTA manages 8-12 tokens per second depending on quantization. High-end ARM boards like the Orange Pi 5 Max can exceed 15 tokens per second with this model. The Orange Pi RV2's 0.44 tokens per second puts it roughly 11-34x slower than comparable ARM and x86 platforms.
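The throughput figures and the 11-34x range follow directly from the numbers quoted above, as this short calculation shows. The Orange Pi 5 Max entry uses 15 tokens per second as a floor, since that board is described as exceeding it.

```python
# Reported latencies on the Orange Pi RV2, in milliseconds per token.
prompt_ms, gen_ms = 1148, 2250

prompt_tps = 1000 / prompt_ms            # prompt-processing tokens per second
gen_tps = 1000 / gen_ms                  # generation tokens per second
response_seconds = 49 * gen_ms / 1000    # time for the 49-token answer

# Typical generation rates (tokens per second) quoted for the other boards.
reference_tps = {
    "Raspberry Pi 5": (5, 8),
    "LattePanda IOTA": (8, 12),
    "Orange Pi 5 Max": (15, 15),  # "can exceed 15", so treat this as a lower bound
}
rv2_tps = 0.44
gaps = {name: (low / rv2_tps, high / rv2_tps) for name, (low, high) in reference_tps.items()}

print(f"prompt: {prompt_tps:.2f} tok/s, generation: {gen_tps:.2f} tok/s")
print(f"49 tokens: {response_seconds:.0f} s")
for name, (low, high) in gaps.items():
    print(f"{name}: {low:.1f}x to {high:.1f}x faster than the RV2")
```

The low end of the range (about 11x) comes from the slowest Raspberry Pi 5 figure and the high end (about 34x) from the Orange Pi 5 Max floor.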

The LLM did produce coherent output, describing RISC-V as "a software-defined architecture for embedded and real-time systems" before noting it was "open-source and community-driven." That the model runs to completion and generates sensible text indicates the RISC-V platform is functionally sound - it's running the same model with the same weights as any other platform. But the performance makes interactive use impractical for anything beyond basic testing and development.

What makes this particularly interesting is that I disabled vector instructions entirely. On ARM and x86 platforms, SIMD instructions provide massive speedups for the matrix multiplications that dominate LLM inference. The Orange Pi RV2 theoretically has vector extensions that could provide similar acceleration, but the immature toolchain forced me to leave them disabled. When RISC-V compiler support matures and llama.cpp can reliably use these hardware capabilities, we might see 2-4x performance improvements - though that would still leave RISC-V trailing ARM significantly.

The State of RISC-V SBCs: Pioneering Territory

It's important to contextualize these results within the broader RISC-V SBC landscape. These boards are extraordinarily new to the market. While ARM-based SBCs have evolved over 12+ years since the original Raspberry Pi, and x86 SBCs have existed even longer, RISC-V platforms aimed at developers and hobbyists have only emerged in the past two years.

The Orange Pi RV2 is essentially a first-generation product in a first-generation market. For comparison, the original Raspberry Pi from 2012 featured a single-core ARM11 processor running at 700 MHz and struggled with basic desktop tasks. Nobody expected it to compete with contemporary x86 systems; it was revolutionary simply for existing at a $35 price point and running Linux.

RISC-V is in a similar position today. The existence of an eight-core RISC-V SBC that can boot Ubuntu, compile complex software, and run large language models is itself remarkable. Five years ago, RISC-V was primarily found in microcontrollers and academic research chips. The progress to application-class processors running general-purpose operating systems has been rapid.

The ecosystem is growing faster than most observers expected. Major distributions like Debian, Fedora, and Ubuntu now provide official RISC-V images. The Rust programming language has first-class RISC-V support in its compiler. Projects like llama.cpp, even with their current limitations, are actively working on RISC-V optimization. Hardware vendors beyond SiFive and Chinese manufacturers are beginning to show interest, with Qualcomm and others investigating RISC-V for specific use cases.

What we're seeing with the Orange Pi RV2 isn't a mature product competing with established platforms - it's a pioneer platform demonstrating what's possible and revealing where work remains. The 10x performance gap versus ARM isn't a fundamental limitation of the RISC-V architecture; it's a measure of how much optimization work ARM has received over the past decade that RISC-V hasn't yet enjoyed.

Where RISC-V Goes From Here

The question isn't whether RISC-V will improve, but how quickly and how much. Several factors suggest significant progress in the near term:

Compiler maturity will improve rapidly as RISC-V gains adoption. LLVM and GCC developers are actively optimizing RISC-V backends, and major software projects are adding RISC-V-specific optimizations. The vector extension issues I encountered will be resolved as compilers catch up with hardware capabilities.

Processor implementations will evolve quickly. The Ky X1 in the Orange Pi RV2 is an early design, but Chinese semiconductor companies are investing heavily in RISC-V, and Western companies are beginning to follow. Second and third-generation designs will benefit from lessons learned in these first products.

Software ecosystem development is accelerating. Critical applications are being ported and optimized for RISC-V, from machine learning frameworks to databases to web servers. As this software matures, RISC-V systems will become more practical for real workloads.

The standardization of extensions will help. RISC-V's modular approach allows vendors to pick and choose which extensions to implement, but this creates fragmentation. As the ecosystem consolidates around standard profiles - baseline feature sets that software can depend on - compatibility and optimization will improve.

However, RISC-V faces challenges that ARM and x86 don't. The lack of a dominant vendor means fragmentation is always a risk. The openness that makes RISC-V attractive also means there's no single company with ARM or Intel's resources pushing the architecture forward. Progress depends on collective ecosystem development rather than centralized decision-making.

For hobbyists and developers today, RISC-V boards like the Orange Pi RV2 serve a specific purpose: experimentation, learning, and contributing to ecosystem development. If you want the fastest compilation times, most compatible software, or best performance per dollar, ARM or x86 remain superior choices. But if you want to be part of an emerging architecture, contribute to open-source development, or simply understand an alternative approach to processor design, RISC-V offers unique opportunities.

Conclusion: A Promising Start

The Orange Pi RV2 demonstrates both the promise and the current limitations of RISC-V in the single board computer space. It's a functional, stable platform that successfully runs complex workloads - just not quickly compared to established alternatives. The 650-second compilation times are roughly 8.5 to 10 times slower than the ARM boards tested here, and the 0.44 tokens-per-second LLM inference trails typical ARM and x86 figures by an even wider margin, but both workloads run correctly and consistently.

This performance gap isn't surprising or condemning. It reflects where RISC-V is in its maturity curve: early, promising, but not yet optimized. The architecture itself has no fundamental limitations preventing it from reaching ARM or x86 performance levels. What's missing is time, optimization work, and ecosystem development.

For anyone considering the Orange Pi RV2 or similar RISC-V boards, set expectations appropriately. This isn't a Raspberry Pi 5 competitor in raw performance. It's a development platform for exploring a new architecture, contributing to open-source projects, and learning about processor design. If those goals align with your interests, the Orange Pi RV2 is a fascinating platform. If you need maximum performance for compilation, machine learning, or general computing, stick with ARM or x86 for now.

But watch this space. RISC-V is moving faster than most expected, and platforms like the Orange Pi RV2 are pushing the boundaries of what's possible with open processor architectures. The 10x performance gap today might be 3x in two years and negligible in five. We're witnessing the early days of a potential revolution in processor architecture, and being able to participate in that development is worth more than a few minutes of faster compile times.

The future of computing might not be exclusively ARM or x86. If RISC-V continues its current trajectory, we could see a genuinely competitive third architecture in the mainstream within this decade. The Orange Pi RV2 is an early step on that journey - imperfect, slow by current standards, but undeniably significant.