AR-15

The AR-15 rifle has become a ubiquitous presence in modern American life, with a rich and complex history that spans more than six decades. Its origins date back to the late 1950s, when ArmaLite developed the rifle as a lightweight, compact, and reliable option for military use. However, its influence extends far beyond its intended purpose as a tool of war. Today, the AR-15 is an iconic symbol of American firearms culture, with more than 10 million sold in the United States alone. Its impact can be seen in popular media, from Hollywood blockbusters to video games, where it is often depicted as a sleek and powerful instrument of justice.

But the AR-15's significance goes beyond its cultural cachet. It has also played a significant role in shaping American politics, particularly when it comes to Second Amendment rights and gun control legislation. The rifle's popularity has sparked heated debates over its use as a civilian firearm, with some arguing that it is too powerful for non-military purposes, while others see it as an essential tool for self-defense and recreational shooting. Despite the controversy surrounding it, the AR-15 remains one of the most popular firearms in the United States, with sales showing no signs of slowing down.

As we delve into the history and context surrounding the AR-15, it becomes clear that its impact is far more nuanced than a simple tale of good vs. evil or pro-gun vs. anti-gun. The rifle's story is intertwined with the very fabric of American society, reflecting our values, our fears, and our aspirations. By examining the complex and multifaceted history of the AR-15, we can gain a deeper understanding not only of the firearms industry but also of ourselves as a nation.

The development of the AR-15 was shaped by technological advances, military needs, and societal trends. The journey began in the 1950s at ArmaLite, a division of the Fairchild Engine and Airplane Corporation, which set out to build a rifle that was lightweight, compact, and reliable. Led by chief designer Eugene Stoner, the team borrowed materials from the aircraft industry, using forged aluminum receivers and synthetic stocks. The result was the AR-10, a 7.62 mm rifle and the precursor to the AR-15. Unlike contemporary designs such as the Belgian FN FAL (Fusil Automatique Léger), which used a gas piston, the AR-10 used a direct gas-impingement system that routed propellant gas straight back to the bolt carrier.

However, the AR-10 was not without its challenges. It reached the U.S. Army's rifle trials late in its development, a barrel failure during testing hurt its chances, and in 1957 the Army adopted the T44 as the M14. Despite this setback, ArmaLite continued to refine the design. At the Army's request for a lighter, small-caliber rifle, ArmaLite engineers L. James Sullivan and Robert Fremont scaled the AR-10 down, and in 1958 the company introduced the AR-15, chambered for a high-velocity .22-caliber cartridge that became the .223 Remington.

The Army's requirements called for a lightweight rifle that fired a high-velocity round with low recoil, and the AR-15 was designed to meet them. Nevertheless, the Army initially stayed with the M14, and in 1959 ArmaLite sold the rights to the design to Colt. Colt promoted the rifle to the armed forces, and in 1962 the U.S. Air Force became the first service to order it.

In 1963, the Department of Defense placed a large order for the rifle, designating the Air Force version the M16 and the Army version, which added a forward assist, the XM16E1. By the mid-to-late 1960s it had replaced the M14 as the primary rifle of American troops in Vietnam, and the Army type-classified the improved M16A1 in 1967. The M16's adoption marked the beginning of a new era in military firearms, characterized by smaller-caliber, high-velocity rounds and lightweight designs. As the Vietnam War raged on, the M16 gained notoriety for its jamming problems, traced in part to a change in ammunition propellant and to a lack of cleaning supplies and training, and for a perceived lack of stopping power.

The controversy did little to dampen civilian interest, and may even have added to it. Colt began selling a semi-automatic civilian version, the AR-15 SP1, in 1964, and some buyers saw the rifle as a symbol of American ingenuity and innovation. Civilian versions grew steadily more popular in the following decades, particularly among target shooters and hunters, as American civilians took a growing interest in modern firearms.

The AR-15's popularity can be attributed to its versatility and adaptability. The rifle is highly customizable, allowing users to modify it to suit their needs. This customization option has made the AR-15 a favorite among gun enthusiasts, who see it as a platform that can be tailored to fit various shooting styles and applications.

The AR-15's popularity also extends beyond recreational shooting. Law enforcement agencies have adopted the rifle for patrol and tactical operations, where its accuracy and reliability make it an effective tool. In the military, the M16 family, including the M4 carbine, has remained in widespread U.S. service, and specialized variants such as the Mk 12 Special Purpose Rifle have served as designated marksman rifles.

Despite its widespread adoption and popularity, the AR-15 has also been involved in several controversies over the years. Some have criticized the rifle for being too complex and prone to jamming issues, while others have raised concerns about its use in mass shootings. However, these criticisms have not diminished the rifle's popularity among gun enthusiasts.

In fact, the controversy has arguably added to the AR-15's mystique and allure. For many, the rifle represents both the country's military might and its civilian fascination with firearms.

The AR-15 is a versatile and widely used semi-automatic rifle that has been in production for more than six decades. Its design and functionality have made it a popular choice among civilian shooters and law enforcement agencies, and its select-fire military derivatives, such as the M16 and M4, serve with armed forces around the world. In this section, we will delve into the details of the AR-15's design and technical characteristics, exploring its major components, operating system, and performance capabilities.

Upper Receiver

The upper receiver is the top half of the AR-15 rifle. It houses the bolt carrier group (BCG) and charging handle, and the barrel, gas system, and sights attach to it. It is typically made from aluminum, and modern "flat-top" uppers feature a Picatinny rail for mounting optics, lights, and other accessories. Many upper receivers also include a forward assist, a plunger that pushes the BCG forward to help the bolt lock fully into battery.

Lower Receiver

The lower receiver is the bottom half of the AR-15 rifle. It contains the magazine well, and the pistol grip and stock attach to it. It is typically made from aluminum or polymer. The buffer tube (receiver extension) threads into the rear of the lower receiver, and the stock mounts on it. The lower receiver also contains the fire control group (FCG), which includes the trigger, hammer, and safety selector. It carries the serial number and is the component that U.S. federal law treats as the firearm itself.

Barrel

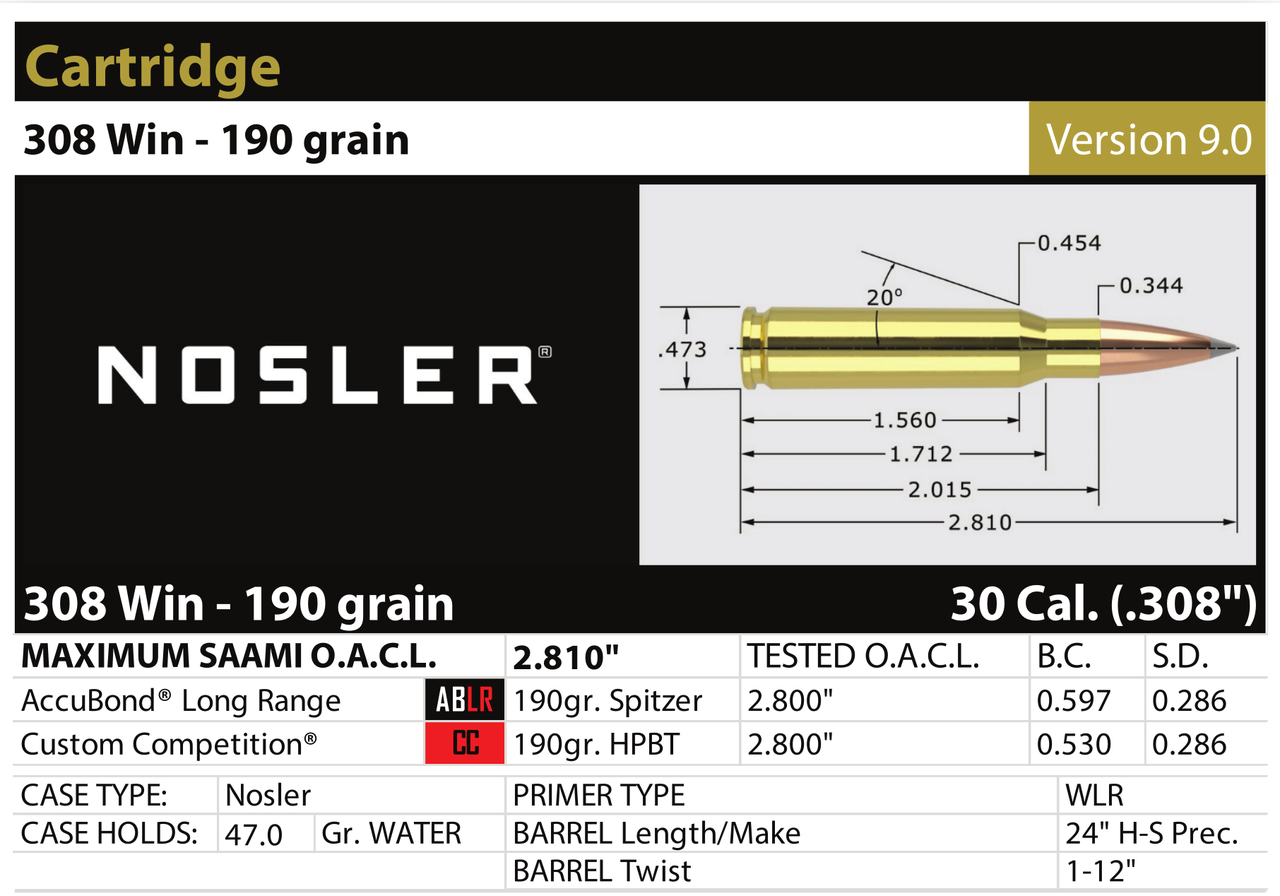

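The barrel of the AR-15 rifle is available in various lengths and chamberings. Civilian rifle barrels commonly range from 16 inches (406 mm) to 24 inches (610 mm); shorter barrels, such as the 14.5-inch (368 mm) barrel of the military M4 carbine, make a rifle a short-barreled rifle under the National Firearms Act. The most common chambering is 5.56×45mm NATO/.223 Remington, with others such as .300 AAC Blackout also available; rifles chambered in .308 Winchester are generally built on the larger AR-10 pattern. The barrel is typically made from chrome-molybdenum-vanadium or stainless steel and features a rifled bore that imparts spin to the bullet.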

Stock

The stock of the AR-15 rifle is designed to provide a comfortable, consistent shooting position. It is typically made from polymer; many stocks are collapsible, offering an adjustable length of pull (LOP), and some include an adjustable cheek rest. The stock mounts on the buffer tube, which threads into the lower receiver.

Operating System

The standard AR-15 uses Eugene Stoner's direct impingement (DI) gas system, and some manufacturers offer gas-piston versions. Both use the high-pressure gases produced by the fired cartridge to cycle the bolt carrier group and eject the spent casing.

- Direct Impingement (DI) System: A gas tube routes propellant gas from the barrel directly into the bolt carrier, driving the BCG rearward. This system is simpler and lighter than a piston system, but it deposits heat and fouling in the receiver, which can reduce reliability in certain applications.

- Gas Piston System: Gas from the barrel drives a piston, which in turn pushes the BCG rearward, keeping most fouling out of the receiver. "Piston-driven" is another name for this type of system rather than a separate third design.

Accuracy

The AR-15 rifle is known for its accuracy, with many users reporting sub-MOA (minute of angle) groups at 100 yards, especially with quality barrels and ammunition. Accuracy benefits in part from the free-floating handguards used on many AR-15s, which keep the handguard from touching the barrel so that it vibrates consistently from shot to shot. The traditional iron sights, a front sight post and a rear peep (aperture) sight, also provide a precise aiming reference.
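As a rough worked example of what "sub-MOA at 100 yards" means, assuming the standard definition of one minute of angle as 1/60 of a degree and using the small-angle approximation (here d is the 100-yard, or 3,600-inch, distance and s is the width the angle subtends):

```latex
\begin{align*}
\theta &= 1\ \text{MOA} = (1/60)^{\circ} = \frac{\pi}{10\,800}\ \text{rad} \approx 2.909 \times 10^{-4}\ \text{rad} \\
s &= d \tan\theta \approx d\,\theta = (3600\ \text{in})(2.909 \times 10^{-4}) \approx 1.047\ \text{in}
\end{align*}
```

So a sub-MOA group at 100 yards measures less than about 1.05 inches across, which shooters often round to one inch.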

Reliability

The AR-15 rifle is known for its reliability, with many users reporting thousands of rounds fired without malfunction, provided the rifle is properly maintained. Its direct impingement system has relatively few moving parts, but because it vents propellant gas into the receiver, fouling builds up on the bolt carrier group, which needs regular cleaning and lubrication. Piston-operated versions keep more of that fouling out of the receiver.

Durability

The AR-15 rifle is known for its durability, with many users reporting years of service without significant wear or tear. The rifle's components are designed to withstand heavy use, including the barrel, which is typically made from high-strength steel alloys. Additionally, the AR-15's stock and other polymer components are designed to be impact-resistant and can withstand rough handling.

In conclusion, the AR-15's accuracy, reliability, and durability have made it a popular choice for a variety of applications, including hunting, target shooting, and tactical operations. Whether it uses direct impingement or a gas piston, its operating system cycles the bolt carrier group and ejects spent casings, and its modular components, including the upper receiver, lower receiver, barrel, and stock, are designed to work together as a single system.

The "black rifle" phenomenon refers to the widespread cultural fascination with tactical firearms, particularly the AR-15 rifle, in the late 20th century. This phenomenon can be understood within the historical context of the 1980s and 1990s gun culture in the United States. During this period, there was a growing interest in tactical shooting sports, driven in part by the popularity of competitive shooting disciplines such as IPSC (International Practical Shooting Confederation) and IDPA (International Defensive Pistol Association). This movement was also fueled by the rise of law enforcement and military tactical training programs, which emphasized the use of specialized firearms and equipment. The AR-15 rifle, with its sleek black design and modular components, became an iconic symbol of this cultural trend. Its popularity was further amplified by the proliferation of gun magazines, books, and videos that featured the rifle in various contexts, from hunting to self-defense.

The impact of the "black rifle" phenomenon on popular media has been significant. Movies such as "Predator" (1987) and "Terminator 2: Judgment Day" (1991) prominently feature AR-15-style rifles, often depicted as futuristic or high-tech firearms. Video games such as "Counter-Strike" (1999) also popularized the rifle's image, allowing players to wield virtual versions of the firearm in various scenarios. Television shows like "The A-Team" (1983-1987) and "Miami Vice" (1984-1990) frequently featured characters using AR-15-style rifles, further solidifying their place in the popular imagination.

The rifle's influence on civilian shooting sports has also been profound. The rise of tactical 3-gun competitions and practical shooting disciplines has created a new generation of shooters who prize the AR-15's versatility and accuracy. Law enforcement agencies have also adopted the rifle as a standard-issue firearm, often using it in SWAT teams and other specialized units. Today, the "black rifle" phenomenon continues to shape American gun culture, with the AR-15 remaining one of the most popular and iconic firearms on the market. Its enduring popularity is a testament to its innovative design, versatility, and the cultural significance it has accumulated over the years.

The 1994 Assault Weapons Ban (AWB) was a landmark piece of legislation that aimed to regulate certain types of firearms, including the AR-15. Signed into law by President Bill Clinton as part of the Violent Crime Control and Law Enforcement Act of 1994, the AWB prohibited the manufacture of new semi-automatic "assault weapons" for civilian use, defined by named models and by combinations of features such as folding stocks, pistol grips, flash suppressors, and bayonet mounts. It also banned the manufacture of new magazines holding more than 10 rounds, although magazines made before the ban remained legal to possess and sell, and the entire law was set to expire after 10 years. The AR-15 was specifically targeted, appearing by name (as the Colt AR-15) among the banned models, because of its popularity among civilians and its similarity to military rifles. However, the law contained several loopholes that allowed manufacturers to modify their designs and continue producing similar firearms. For example, many manufacturers began producing "post-ban" AR-15s with fixed stocks, no bayonet lug, and no flash suppressor, which fell outside the ban.

Despite its intentions, the AWB appears to have had a limited impact on gun violence. Evaluations, including research funded by the U.S. Department of Justice, found that any effect on overall firearm deaths and injuries was small and difficult to measure. Additionally, the ban was often circumvented by manufacturers who simply modified their designs to comply with the new regulations, and it created a thriving market for grandfathered "pre-ban" AR-15s, which were highly sought after by collectors and enthusiasts. When the ban expired in 2004, its restrictions lapsed, allowing manufacturers to once again produce firearms with previously banned features. In recent years, there have been numerous attempts at both the state and federal levels to regulate the AR-15 and other assault-style rifles. For example, California restricts the sale and possession of semi-automatic rifles that combine a detachable magazine with features such as a pistol grip or a folding stock.

The ongoing debate over Second Amendment rights and gun control continues to be a contentious issue in American politics. While proponents of stricter regulations argue that they are necessary to reduce gun violence and protect public safety, opponents contend that such laws infringe upon the constitutional right to bear arms. The AR-15 has become a lightning rod for this debate, with many gun control advocates singling out the rifle as a symbol of the types of firearms that should be restricted or banned. However, supporters of the Second Amendment argue that the AR-15 is a popular and versatile firearm that is used by millions of law-abiding citizens for hunting, target shooting, and self-defense. As the debate continues, it remains to be seen whether new regulations will be implemented at the federal or state levels, or if the status quo will remain in place.

Federal laws play a significant role in governing the sale and ownership of the AR-15. The National Firearms Act (NFA), enacted in 1934, regulates certain types of firearms, including short-barreled rifles and machine guns; an AR-15 with a barrel shorter than 16 inches, for example, is a short-barreled rifle under the NFA. Making or transferring such a firearm requires prior approval from the Bureau of Alcohol, Tobacco, Firearms and Explosives (ATF) and registration in the National Firearms Registration and Transfer Record. The registration process involves submitting an application, paying any applicable tax, and providing detailed information about the firearm, including its make, model, and serial number. The NFA also imposes ongoing requirements on owners: transfers must be approved by ATF in advance, and owners of certain NFA firearms, such as short-barreled rifles and machine guns, must obtain ATF approval before transporting them across state lines.

The Gun Control Act (GCA) of 1968 is another key piece of legislation that regulates the sale and possession of firearms, including the AR-15. The GCA regulates interstate commerce in firearms and requires anyone engaged in the business of manufacturing, importing, or dealing in firearms to be federally licensed. It defines a "firearm" as any weapon that will, is designed to, or may readily be converted to expel a projectile by the action of an explosive, along with the frame or receiver of such a weapon, any firearm muffler or silencer, and any destructive device. The law also establishes categories of people who are prohibited from possessing firearms, such as felons, fugitives, people who have been adjudicated as mentally defective or committed to a mental institution, and unlawful users of controlled substances. Since 1998, under amendments made by the Brady Handgun Violence Prevention Act, licensed dealers must check buyers through the National Instant Criminal Background Check System (NICS), which is maintained by the FBI.

In addition to regulating the sale and possession of firearms, the GCA imposes record-keeping and marking requirements on licensed dealers and manufacturers. For example, dealers must maintain accurate records of all firearm acquisitions and dispositions, including sales, purchases, and transfers. Manufacturers must mark each firearm with a serial number, along with their name and location and, where designated, the model and caliber or gauge. The GCA also establishes penalties for violations of its provisions, including fines and imprisonment. Overall, the NFA and GCA work together to regulate the sale and possession of firearms in the United States, including the AR-15.

State-specific laws regulating the AR-15 vary widely across the country, reflecting the diverse attitudes toward firearms ownership among different states. Some states, such as California and New York, have implemented strict regulations on the sale and possession of assault-style rifles, including the AR-15. These regulations may include requirements for registration, background checks, and magazine capacity limits. For example, California's Assault Weapons Ban prohibits the sale of semi-automatic rifles it classifies as assault weapons, including AR-15 variants, and allows continued possession only of those registered with the state during past registration periods. To be sold legally in California, an AR-15-style rifle must generally either lack the listed features (such as a pistol grip or a folding stock) or use a "fixed" magazine that cannot be removed without disassembling the action, and the state also restricts magazines holding more than 10 rounds.

In contrast, other states have more lenient laws governing firearms ownership. Arizona and Texas, for example, have relatively few restrictions on the sale and possession of assault-style rifles. Neither state bans such rifles, requires firearm registration, or requires background checks for private sales between individuals, although purchases from licensed dealers remain subject to the federal background-check requirement. These differing state laws can create confusion for gun owners and dealers who operate in multiple states. For example, a dealer may be required to follow strict regulations when selling an AR-15 in California, but not when selling the same firearm in Arizona.

The conflicting state laws governing firearms ownership have also led to litigation between gun rights groups and state governments. For example, the National Rifle Association (NRA) has challenged California's Assault Weapons Ban in court, arguing that it violates individuals' Second Amendment right to bear arms. Similarly, other gun rights groups have challenged New York's SAFE Act, which regulates the sale and possession of assault-style rifles, including the AR-15. These lawsuits highlight the ongoing debate over firearms ownership and regulation in the United States, with different states taking varying approaches to regulating the sale and possession of firearms like the AR-15.

Although neither case involved an AR-15 (both concerned handgun bans), two Supreme Court decisions have significant implications for firearms law, including the regulation of the rifle. In District of Columbia v. Heller (2008), the Supreme Court ruled that individuals have a constitutional right to possess a firearm for traditionally lawful purposes, such as self-defense within the home, and described the protected weapons as those "in common use at the time," language that has since featured prominently in challenges to assault weapons bans. This ruling has been interpreted by some courts to limit the ability of governments to regulate firearms ownership. In McDonald v. City of Chicago (2010), the Supreme Court ruled that the Second Amendment applies to state and local governments, not just the federal government.

The AR-15 has been involved in several high-profile mass shootings, including the Sandy Hook Elementary School shooting in Newtown, Connecticut, in 2012 and the Marjory Stoneman Douglas High School shooting in Parkland, Florida, in 2018. These incidents have sparked widespread debate and outrage over the accessibility of semi-automatic rifles like the AR-15. Many argue that these types of firearms are not suitable for civilian use and should be restricted or banned due to their potential for mass casualties. The Sandy Hook shooting, in which the gunman killed 26 people at the school, including 20 children, was carried out with a Bushmaster XM15-E2S, an AR-15-style rifle, using 30-round magazines. Those magazines allowed the shooter to fire many rounds without needing to reload, increasing the speed with which he could inflict harm.

The ease with which the shooter at Sandy Hook was able to inflict such widespread harm has led many to question whether semi-automatic rifles like the AR-15 should be legally available to civilians. The 30-round magazines used in the shooting are based on a design developed for military use, where soldiers often face multiple targets and need to fire repeatedly without reloading. However, this same feature makes it much easier for a shooter to inflict mass casualties in a civilian setting. Many have argued that such magazines should be banned or restricted to prevent future tragedies like Sandy Hook.

The involvement of the AR-15 in other high-profile mass shootings has further fueled the debate over its suitability for civilian use. For example, the shooter who killed 17 people at Marjory Stoneman Douglas High School in Parkland, Florida, used an AR-15-style rifle he had bought legally about a year earlier, when he was 18, despite a history of troubling behavior that had been reported to authorities. The ease with which he was able to purchase the rifle raised concerns about the effectiveness of background checks and other regulations designed to prevent such purchases. As the debate over gun control continues, many are calling for stricter regulations on semi-automatic rifles like the AR-15, or even an outright ban on their sale and possession.

The debate over whether the AR-15 is a "weapon of war" or a legitimate hunting rifle has been ongoing for years. Proponents of gun control argue that the AR-15's design and capabilities make it more suitable for military use than for civilian purposes such as hunting or target shooting. They point to its high rate of fire, large magazine capacity, and ability to accept modifications that enhance its lethality. These features, they argue, are not necessary for hunting or sporting purposes and serve only to increase the rifle's potential for harm in the wrong hands. For example, the AR-15's ability to fire multiple rounds quickly makes it more suitable for combat situations where soldiers need to lay down suppressive fire to pin down enemy forces.

On the other hand, supporters of the Second Amendment argue that the AR-15 is a legitimate sporting rifle that can be used for a variety of purposes, including hunting small game and competitive shooting sports. They point out that many hunters use semi-automatic rifles like the AR-15 to hunt larger game such as deer and other similarly sized animals. The rifle's accuracy and reliability make it well-suited for these purposes, they argue. Additionally, supporters of the AR-15 note that the rifle is highly customizable, allowing users to modify it to suit their specific needs and preferences. This customization capability, they argue, makes the AR-15 a versatile and practical choice for hunters and competitive shooters.

Despite these arguments, proponents of gun control remain unconvinced that the AR-15 is suitable for civilian use. They point out that the rifle's design and capabilities make it more similar to military firearms than to traditional hunting rifles; for example, its ability to accept a variety of accessories and modifications, including scopes, flashlights, and suppressors, makes it highly adaptable in combat situations. As the debate over gun control continues, the question of whether the AR-15 is a "weapon of war" or a legitimate hunting rifle remains contentious.

The role of gun culture and media in shaping public perceptions of the AR-15 has been significant. The firearm's popularity among enthusiasts and its depiction in popular media, such as movies and video games, have contributed to its widespread recognition and appeal. For example, the AR-15 is often featured in first-person shooter video games, where it is portrayed as a versatile and powerful firearm that can be customized with various accessories. This portrayal has helped to fuel the rifle's popularity among gamers and enthusiasts alike. Additionally, the AR-15 is often depicted in movies and television shows as a military-grade firearm used by special forces or other elite units. These depictions have contributed to the rifle's reputation as a high-performance firearm that is capable of withstanding harsh environments.

However, this visibility has also led to a backlash against the rifle, with many people associating it with mass shootings and violence. The media's coverage of mass shootings involving the AR-15 has often reinforced this association, fueling public outrage and calls for stricter gun control measures. For example, after the Sandy Hook Elementary School shooting in 2012, which involved an AR-15-style rifle, there was a significant increase in negative media coverage of the firearm. This coverage contributed to widespread public concern about the availability of semi-automatic rifles like the AR-15 and fueled demands for stricter regulations on their sale and ownership.

This dichotomy between the positive portrayal of the AR-15 in some circles and its negative depiction in others highlights the complex and multifaceted nature of the debate surrounding semi-automatic rifles like the AR-15. On one hand, enthusiasts and supporters of the Second Amendment see the AR-15 as a legitimate sporting rifle that is used for recreational purposes such as hunting and target shooting. On the other hand, critics of the firearm view it as a symbol of gun violence and mass shootings, and argue that its sale and ownership should be heavily regulated or banned altogether. As the debate over gun control continues to rage on, the AR-15 seems likely to remain at the center of this contentious issue for years to come.

In a bizarre and fascinating spectacle, a church in Pennsylvania made headlines in February 2018 for blessing couples and their AR-15 rifles. The ceremony, led by Pastor Sean Moon, was intended to celebrate the love between husbands and wives, as well as their firearms. The event, dubbed "Couples' Love Mass Wedding," saw over 200 couples gather at the World Peace and Unification Sanctuary in Newfoundland, Pennsylvania. As part of the ritual, each couple brought an AR-15 rifle with them, which they held throughout the ceremony. Pastor Moon, a son of Unification Church founder Sun Myung Moon, blessed the rifles alongside the couples, praying for their love and commitment to one another.

This unusual event sparked both amazement and outrage, highlighting the deep-seated cultural significance of firearms in some communities. The choice of an AR-15 as a symbol of marital devotion is particularly striking, given the rifle's associations with mass shootings and gun violence. For Pastor Moon and his congregation, however, the AR-15 represents a different set of values: the right to self-defense, patriotism, and traditional American culture. This ceremony demonstrates how, in some circles, firearms have become an integral part of identity and cultural expression. The event also underscores the extent to which the AR-15 has permeated popular culture, transcending its origins as a military rifle design.

As news of the blessing ceremony spread, it sparked heated debates about gun culture, religious freedom, and social norms. While some saw the event as a harmless celebration of love and commitment, others viewed it as a disturbing example of how firearms have become fetishized in American society. The controversy surrounding this event serves as a microcosm for the broader cultural divide between those who see guns as an integral part of their identity and way of life, and those who view them as instruments of violence and harm. As America grapples with its gun culture, events like this ceremony remind us that, for some people, firearms have become deeply embedded in their sense of self and community.

In a peculiar legal quirk, federal law generally allows individuals who are not prohibited from possessing firearms to manufacture their own firearms, including an AR-15 lower receiver, for personal use without a license, provided they are not making them for sale or distribution; several states impose additional restrictions. This loophole has led to a thriving community of machinists and DIY enthusiasts who have taken to milling their own lower receivers using computer-controlled machining tools. The process involves starting with a blank aluminum block, which is then precision-milled to create the complex shape and features required for an AR-15 lower receiver. For many in this community, the motivation is not economic but an exercise of their legal rights: by creating their own firearm component, they see themselves as asserting their Second Amendment freedoms and taking control over their own self-defense.

However, not everyone has the luxury of owning a milling machine or the skills to operate one. This is where 3D printing comes in. In recent years, advances in additive manufacturing have made it possible for hobbyists and enthusiasts to print high-quality AR-15 lower receivers on affordable 3D printers. The process involves creating a digital model of the design and then printing it on consumer-grade 3D printing equipment. Once printing is complete, the part can be machined or sanded to fit the other components of a functional rifle.

When 3D-printed guns first emerged, many people considered them unconscionable and predicted chaos. That hysteria has largely subsided as the reality of the situation has become clearer. Enthusiasts such as Tim Hoffman of Hoffman Tactical have released high-quality 3D-printable models of various AR-platform weapons, designed around the limitations and capabilities of 3D printing and optimized for strength, durability, and reliability. With these advances, individuals can now make high-quality firearm components at home, opening up new possibilities for customization and innovation in the firearms industry.

The AR-15 rifle has become a polarizing symbol in American culture, representing freedom to some groups and violence to others. On one hand, enthusiasts and supporters of the Second Amendment see the AR-15 as a legitimate sporting rifle used for recreational purposes such as hunting and target shooting. On the other hand, critics view it as a symbol of gun violence and mass shootings and argue that its sale and ownership should be heavily regulated or banned altogether. This dichotomy is reflected in media coverage, where some outlets depict the rifle as a menacing instrument of death while others showcase its use in sporting events and competitions.

Unusual events such as a church ceremony in Pennsylvania, where couples brought their AR-15 rifles to be blessed along with their marriages, show how deeply firearms are woven into some communities. The spectacle sparked both amazement and outrage. For Pastor Sean Moon and his congregation, the AR-15 represents values such as self-defense, patriotism, and traditional American culture. Others, however, saw it as a disturbing example of how firearms have become fetishized in American society. The event serves as a microcosm of the broader cultural divide between those who see guns as an integral part of their identity and way of life, and those who view them as instruments of violence and harm.

Advances in technology have also impacted the debate surrounding the AR-15, with the rise of 3D printing allowing individuals to create high-quality firearms components at home. While some initially predicted chaos and uncontrollable proliferation of guns, reality has shown that these fears were largely unfounded. Enthusiasts like Tim Hoffman have released high-quality 3D printable models optimized for strength, durability, and reliability. This development opens up new possibilities for customization and innovation in the firearms industry, allowing individuals to assert their Second Amendment freedoms and take control over their own self-defense. However, it also raises questions about regulation and accountability, highlighting the need for ongoing dialogue and debate on this complex issue.

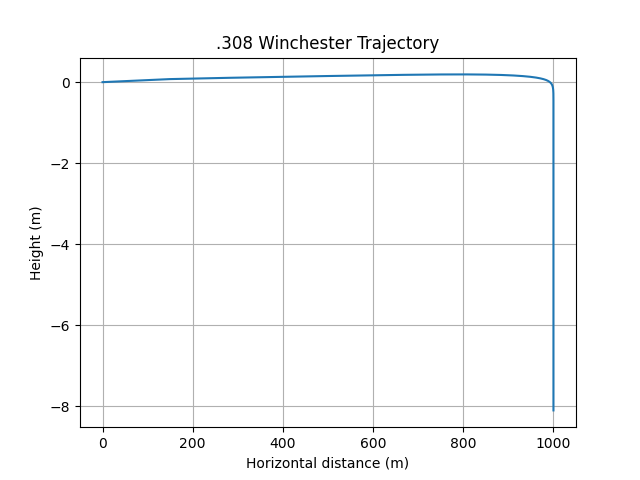

In the world of ballistics, precision is paramount. Whether it's a military operation, a hunting expedition, or a competitive shooting event, the trajectory of a projectile can make all the difference between success and failure. At the heart of this quest for accuracy lies the ballistic coefficient (BC), a fundamental concept that describes the aerodynamic efficiency of a projectile.

While Euler's method provides a basic framework for approximating solutions to differential equations, more sophisticated techniques such as the

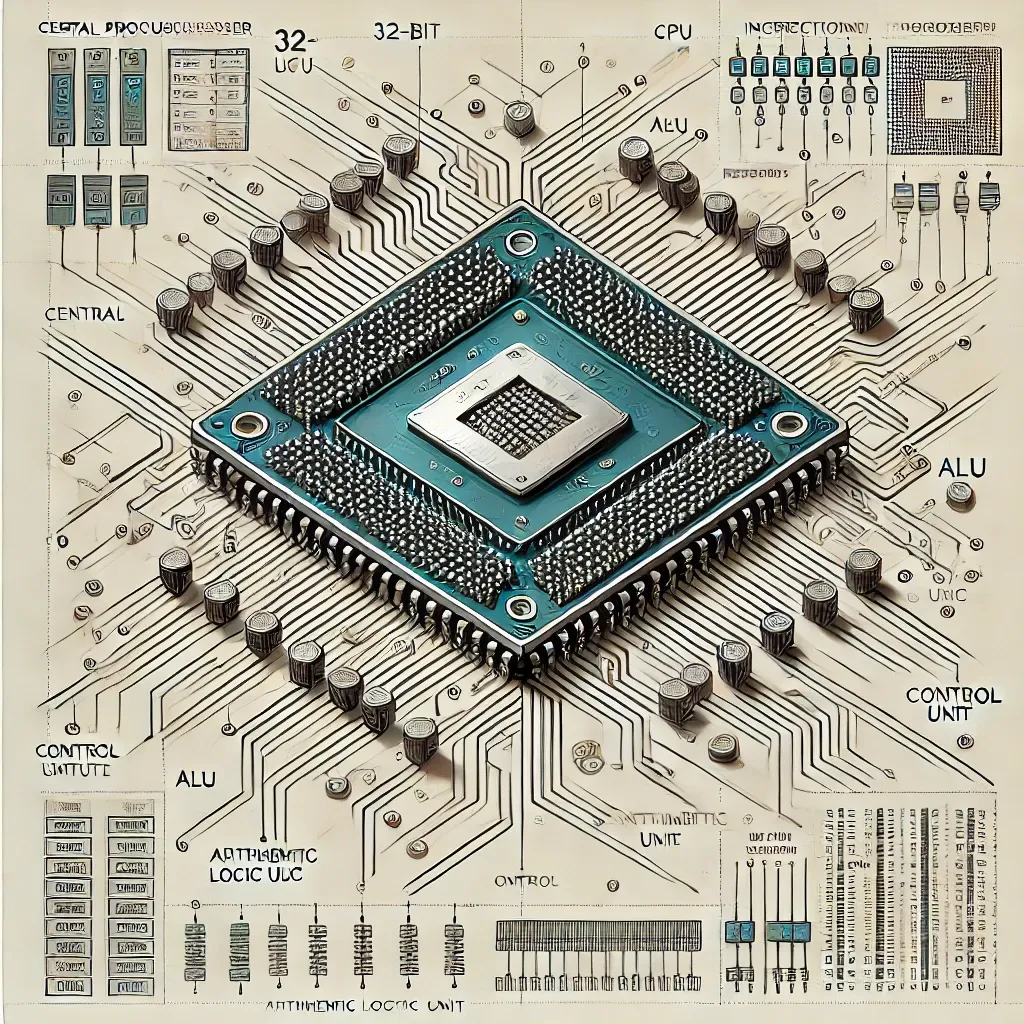

MIPS is a RISC (Reduced Instruction Set Computing) instruction set architecture developed by John Hennessy and his team at Stanford University in the early 1980s; the name originally stood for "Microprocessor without Interlocked Pipeline Stages." It has been one of the most widely used instruction set architectures in the world, with applications ranging from embedded systems to high-performance computing. Its significance lies in its simplicity, efficiency, and scalability, which made it a good fit for a wide range of applications.

I am continuing my detour from programming languages, single-board computers, math, and financial markets to write another piece on the Mesabi Iron Range; it expands on a conversation I had with Pulsar Helium's geologist about iron mining's 140-year legacy on the land and its people.

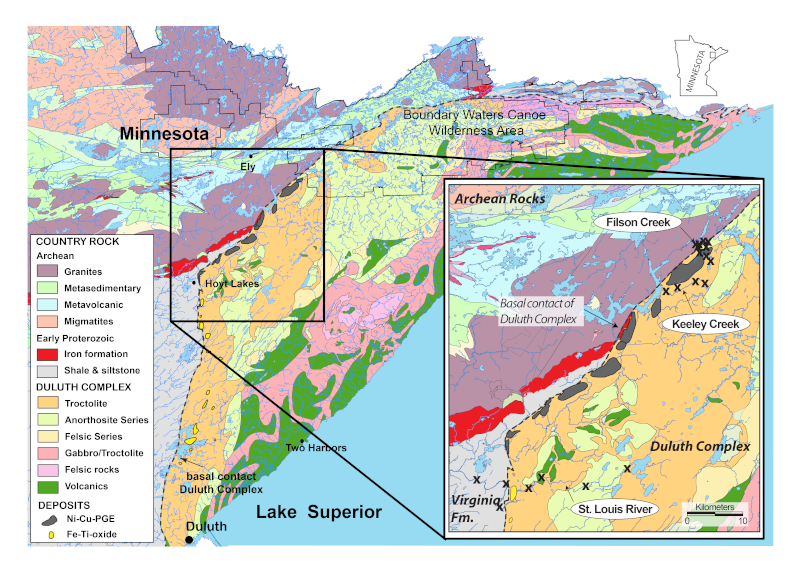

Iron mining in Minnesota's Mesabi Range has had significant environmental implications. One major issue is the management of waste rock and tailings: large-scale open-pit extraction and processing of lower-grade taconite iron ore produce vast amounts of waste material, which has often been deposited in nearby lakes and wetlands.

I'm taking a detour from my usual topics of single-board computers, programming languages, mathematics, machine learning, 3D printing, and financial markets to write about the geology of a part of Minnesota that held a fascinating secret until very recently.

Granite is also present in the Duluth Complex, although it is less abundant than gabbro. This lighter-colored, coarse-grained rock is rich in silica and aluminum, and forms a distinctive suite of rocks that are different from the surrounding gabbro.

The discovery of helium in the Dunka River area was a serendipitous event that occurred during a routine exploratory drilling project. Geologists were primarily assessing the area's potential for copper, nickel, and platinum-group metals, given the region's rich geological history tied to the Duluth Complex. During drilling, however, gas samples collected from the wellhead showed unusual properties, prompting further analysis. Using gas chromatography and mass spectrometry, the team identified a significant concentration of helium, a rare and valuable element. The discovery was unexpected: helium is typically associated with natural gas fields, and finding it in igneous rock formations like those of the Duluth Complex was highly unusual.

The history of Unix begins in the 1960s at Bell Labs, where a team of researchers was working on an operating system called Multics (Multiplexed Information and Computing Service). Developed beginning in 1965 by a consortium of MIT, General Electric, and Bell Labs (Bell Labs withdrew in 1969), Multics was one of the first time-sharing systems. Although it never achieved wide commercial success, it laid the groundwork for future operating systems.
