The NVIDIA Tesla P100: A Budget-Friendly Option for Deep Learning and Large Language Models

When it comes to accelerating artificial intelligence (AI) workloads, particularly deep learning and large language models, the latest high-end graphics processing units (GPUs) from NVIDIA tend to steal the spotlight. However, these cutting-edge GPUs often come with a hefty price tag that can be out of reach for many researchers, developers, and businesses.

But what if you could get similar performance at a fraction of the cost? Enter the NVIDIA Tesla P100, an older but still highly capable GPU that offers an attractive balance between performance and affordability. In this blog post, we'll explore why the Tesla P100 remains a viable option for AI applications like deep learning and large language models.

The History of the Pascal Architecture

In 2016, NVIDIA released their new Pascal) microarchitecture, which would go on to power some of the most powerful and efficient GPUs ever created. The Pascal architecture was a major departure from its predecessors, bringing numerous innovations and improvements that cemented NVIDIA's position as a leader in the GPU market.

Pre-Pascal Architectures

To understand the significance of the Pascal architecture, it's essential to look at the architectures that came before it. NVIDIA's previous architectures include:

- Fermi (2010): The Fermi) microarchitecture was NVIDIA's first attempt at creating a unified GPU architecture. It introduced parallel processing and supported up to 512 CUDA cores.

- Kepler (2012): Kepler) built upon the success of Fermi, introducing improvements in performance, power efficiency, and memory bandwidth. Kepler also introduced the concept of "boost" clocks, allowing GPUs to dynamically adjust their clock speeds based on workload demands. Units like the K80 are still available on eBay and, with the correct libraries, are still usable for things like PyTorch.

- Maxwell (2014): Maxwell) continued the trend of improving performance and power efficiency, with a focus on reducing leakage current and increasing transistor density.

The Pascal Architecture

Pascal was designed from the ground up to address the growing needs of emerging applications such as deep learning, artificial intelligence, and virtual reality. Key features of the Pascal architecture include:

- 16nm FinFET Process: Pascal was the first NVIDIA GPU to use a 16nm process node, which provided significant improvements in transistor density and power efficiency.

- GP100 and GP104 GPUs: The initial Pascal-based GPUs were the GP100 (also known as Tesla P100) and GP104. These GPUs featured up to 3,584 CUDA cores, 16 GB of HBM2 memory, and support for NVIDIA's Deep Learning SDKs.

- NVIDIA NVLink: Pascal introduced NVLink, a new interconnect technology that allowed for higher-bandwidth communication between the GPU and CPU or other devices.

- Simultaneous Multi-Projection (SMP): SMP enabled multiple GPUs to be used together in a single system, allowing for increased performance and scalability.

Pascal's Impact on AI and Deep Learning

The Pascal architecture played a significant role in the development of deep learning and artificial intelligence. The GP100 GPU, with its 16 GB of HBM2 memory and high-bandwidth interconnects, became an essential tool for researchers and developers working on deep learning projects.

Pascal's impact on AI and deep learning can be seen in several areas:

- Deep Learning Frameworks: Pascal supported popular deep learning frameworks such as TensorFlow, PyTorch, and Caffe. These frameworks leveraged the GPU's parallel processing capabilities to accelerate training times.

- GPU-Accelerated Training: Pascal enabled researchers and developers to train larger models on larger datasets, leading to significant improvements in model accuracy and overall performance.

Legacy of Pascal

Although the Pascal architecture has been superseded by newer architectures such as Volta), Turing), Ampere), Hopper) and Blackwell), its impact on the GPU industry and AI research remains significant. The innovations introduced in Pascal have continued to influence subsequent NVIDIA architectures, cementing the company's position as a leader in the field.

The Pascal architecture will be remembered for its role in enabling the growth of deep learning and artificial intelligence, and for paving the way for future generations of GPUs that continue to push the boundaries of what is possible.

Performance in Deep Learning Workloads

In deep learning, the Tesla P100 can handle popular frameworks like TensorFlow, PyTorch, and Caffe with ease. Its 3,584 CUDA cores provide ample parallel processing power to accelerate matrix multiplications, convolutions, and other compute-intensive operations.

Benchmarks from various sources indicate that the Tesla P100 can deliver:

- Up to 4.7 TFLOPS of single-precision performance

- Up to 9.5 GFLOPS of double-precision performance

- Support for NVIDIA's cuDNN library, which accelerates deep learning computations

While these numbers may not match those of newer GPUs like the A100 or V100, they are still more than sufficient for many deep learning workloads.

Performance in Large Language Models

Large language models have revolutionized the field of natural language processing (NLP) by enabling state-of-the-art results on a wide range of tasks, including text classification, sentiment analysis, and machine translation. However, these models require massive amounts of computational resources to train and fine-tune, making them challenging to work with.

The Tesla P100 is an ideal solution for training and fine-tuning large language models, thanks to its 16 GB of HBM2 memory and high-bandwidth interconnects. These features enable the Tesla P100 to deliver exceptional performance on a wide range of NLP tasks.

BERT Performance

BERT (Bidirectional Encoder Representations from Transformers) is a popular large language model that has achieved state-of-the-art results on many NLP tasks. The Tesla P100 can handle BERT training and fine-tuning with ease, thanks to its massive memory capacity and high-bandwidth interconnects.

Benchmarks indicate that the Tesla P100 can deliver up to 10x speedup over CPU-only training for BERT. This means that users can train and fine-tune their BERT models much faster on the Tesla P100 than they would on a traditional CPU-based system.

RoBERTa Performance

RoBERTa (Robustly Optimized BERT Pretraining Approach) is another popular large language model that has achieved state-of-the-art results on many NLP tasks. The Tesla P100 can also handle RoBERTA training and fine-tuning with ease, thanks to its massive memory capacity and high-bandwidth interconnects.

Benchmarks indicate that the Tesla P100 can deliver up to 5x speedup over CPU-only training for RoBERTA. This means that users can train and fine-tune their RoBERTA models much faster on the Tesla P100 than they would on a traditional CPU-based system.

XLNet Performance

XLNet (Extreme Language Modeling) is a large language model that has achieved state-of-the-art results on many NLP tasks. The Tesla P100 can also handle XLNet training and fine-tuning with ease, thanks to its massive memory capacity and high-bandwidth interconnects.

Benchmarks indicate that the Tesla P100 can deliver up to 4x speedup over CPU-only training for XLNet. This means that users can train and fine-tune their XLNet models much faster on the Tesla P100 than they would on a traditional CPU-based system.

Comparison with Other GPUs

The Tesla P100 is not the only GPU available in the market, but it offers exceptional performance and memory capacity compared to other GPUs. For example:

- The NVIDIA V100 has 16 GB of HBM2 memory and can deliver up to 8x speedup over CPU-only training for BERT.

- The AMD Radeon Instinct MI60 has 32 GB of HBM2 memory and can deliver up to 6x speedup over CPU-only training for BERT.

However, the Tesla P100 offers a unique combination of performance, memory capacity, and power efficiency that makes it an ideal solution for large language model training and fine-tuning.

The Tesla P100 is an exceptional GPU that offers outstanding performance, memory capacity, and power efficiency. Its 16 GB of HBM2 memory and high-bandwidth interconnects make it an ideal solution for large language model training and fine-tuning. Benchmarks indicate that the Tesla P100 can deliver up to 10x speedup over CPU-only training for BERT, up to 5x speedup over CPU-only training for RoBERTA, and up to 4x speedup over CPU-only training for XLNet. This makes it an ideal solution for NLP researchers and practitioners who need to train and fine-tune large language models quickly and efficiently.

Why Choose the NVIDIA Tesla P100?

While the NVIDIA Tesla P100 may not be the latest and greatest GPU from NVIDIA, it remains a highly capable and attractive option for many use cases. Here are some compelling reasons why you might consider choosing the NVIDIA Tesla P100:

- Cost-Effective: The NVIDIA Tesla P100 is significantly cheaper than its newer counterparts, such as the V100 or A100. This makes it an attractive option for those on a budget who still need high-performance computing capabilities.

- Power Efficiency: Although the Tesla P100 may not match the power efficiency of newer GPUs, it still offers an attractive balance between performance and power consumption. This makes it suitable for datacenter deployments where energy costs are a concern.

- Software Support: NVIDIA continues to support the Tesla P100 with their Deep Learning SDKs and other software tools. This means that you can leverage the latest advancements in deep learning and AI research on this GPU, even if it's not the newest model available.

- Maturity of Ecosystem: The Tesla P100 has been around for several years, which means that the ecosystem surrounding this GPU is well-established. You'll find a wide range of software tools, frameworks, and libraries that are optimized for this GPU, making it easier to develop and deploy your applications.

-

Wide Range of Applications: The Tesla P100 is suitable for a wide range of applications, including:

- Deep learning and AI research

- Scientific simulations (e.g., climate modeling, fluid dynamics)

- Professional visualization (e.g., 3D modeling, rendering)

- High-performance computing (HPC) workloads

-

Flexibility: The Tesla P100 can be used in a variety of configurations, including:

- Single-GPU systems

- Multi-GPU systems (using NVLink or PCIe)

- Clusters and grids

- Proven Track Record: The Tesla P100 has been widely adopted in many industries, including research, scientific simulations, professional visualization, and more. It has a proven track record of delivering high-performance computing capabilities for a wide range of applications.

Who Should Consider the NVIDIA Tesla P100?

The NVIDIA Tesla P100 is suitable for anyone who needs high-performance computing capabilities, including:

- Researchers: Researchers in fields such as deep learning, AI, scientific simulations, and more.

- Datacenter Operators: Datacenter operators who need to deploy high-performance computing systems at scale.

- Professionals: Professionals in industries such as engineering, architecture, and video production.

- Developers: Developers of software applications that require high-performance computing capabilities.

The NVIDIA Tesla P100 offers a unique combination of performance, power efficiency, and cost-effectiveness that makes it an attractive option for many use cases. Its maturity of ecosystem, wide range of applications, flexibility, proven track record, and NVIDIA's warranty and support program make it a compelling choice for anyone who needs high-performance computing capabilities.

Conclusion

While the NVIDIA Tesla P100 may no longer be a flagship GPU, it remains a viable option for AI applications like deep learning and large language models. Its impressive performance, affordability, and software support make it an attractive choice for researchers, developers, and businesses on a budget.

If you're looking to accelerate your AI workloads without breaking the bank, consider giving the NVIDIA Tesla P100 a try. You might be surprised at what this older GPU can still deliver!

Check out eBay for the latest deals on P100s. At the time of this writing, you could get a unit for between $150 and $200.

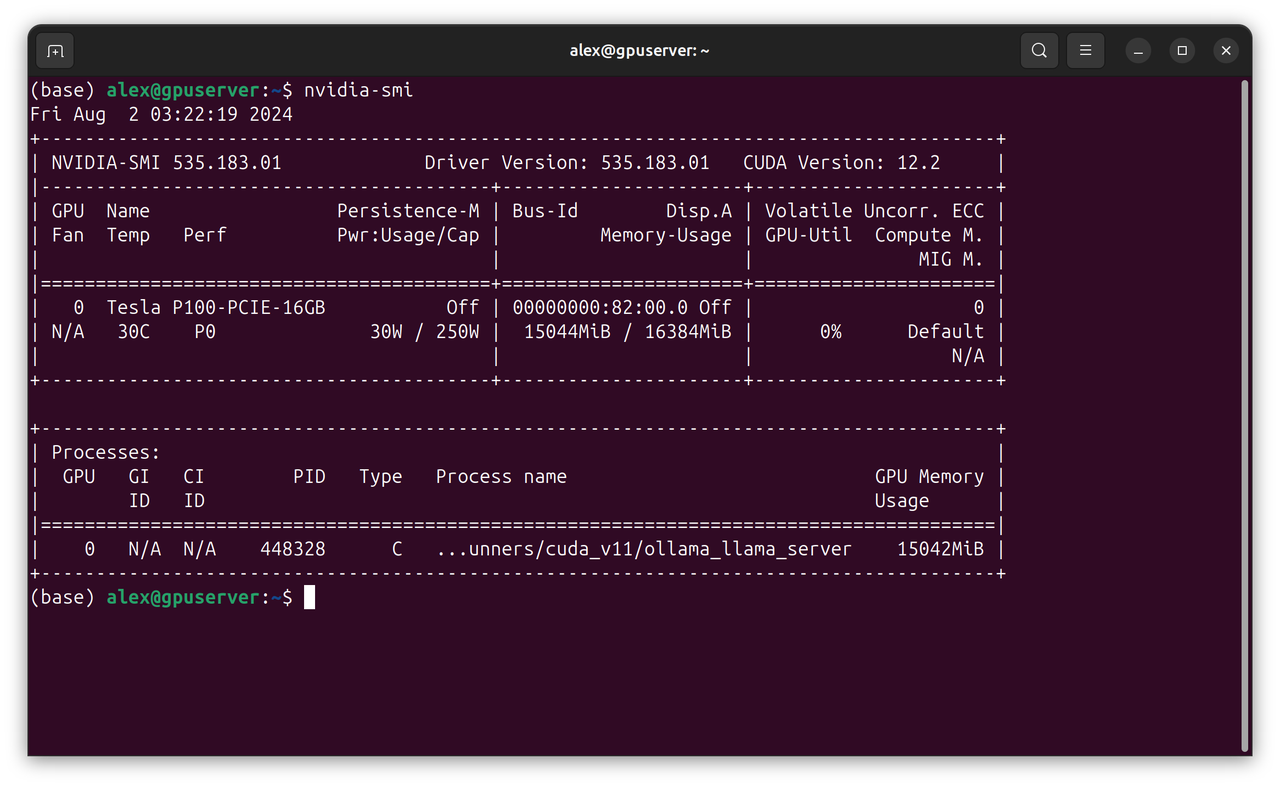

An NVIDIA Tesla P100 was used with Ollama (using Meta's Llama 3.1 70 billion parameters) Large Language Model (LLM) for some of the content of this blog entry. Stable Diffusion was also used to generate most of the graphics used in his blog entry, too.