Introduction

The Banana Pi CM5-Pro (also sold as the ArmSoM-CM5) represents Banana Pi's entry into the Raspberry Pi Compute Module 4 form factor market, powered by Rockchip's RK3576 SoC. Released in 2024, this compute module targets developers seeking a CM4-compatible solution with enhanced specifications: up to 16GB of RAM, 128GB of storage, WiFi 6 connectivity, and a 6 TOPS Neural Processing Unit for AI acceleration. With a price point of approximately $103 for the 8GB/64GB configuration and a guaranteed production life until at least August 2034, Banana Pi positions the CM5-Pro as a long-term alternative to Raspberry Pi's official offerings.

After extensive testing, benchmarking, and comparison against contemporary single-board computers including the Orange Pi 5 Max, Raspberry Pi 5, and LattePanda IOTA, the Banana Pi CM5-Pro emerges as a competent but not exceptional offering. It delivers solid performance, useful features including AI acceleration, and good expandability, but falls short of being a clear winner in any specific category. This review examines where the CM5-Pro excels, where it disappoints, and who should consider it for their projects.

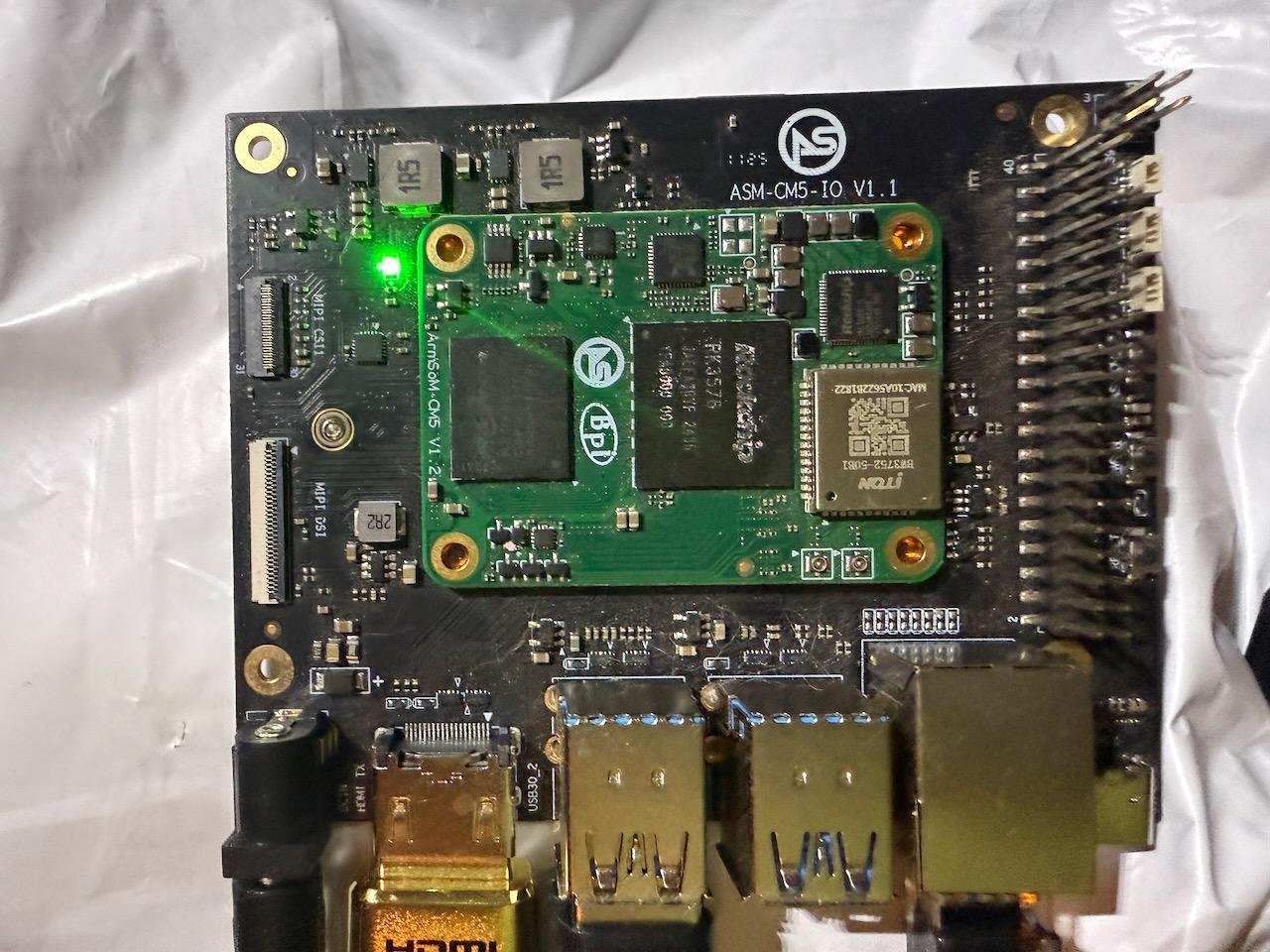

Banana Pi CM5-Pro showing the dual 100-pin connectors and CM4-compatible form factor

Hardware Architecture: The Rockchip RK3576

At the heart of the Banana Pi CM5-Pro lies the Rockchip RK3576, a second-generation 8nm SoC featuring a big.LITTLE ARM architecture:

- 4x ARM Cortex-A72 cores @ 2.2 GHz (high performance)

- 4x ARM Cortex-A53 cores @ 1.8 GHz (power efficiency)

- 6 TOPS Neural Processing Unit (NPU)

- Mali-G52 MC3 GPU

- 8K@30fps H.265/VP9 decoding, 4K@60fps H.264/H.265 encoding

- Up to 16GB LPDDR5 RAM support

- Dual-channel DDR4/LPDDR4/LPDDR5 memory controller

The Cortex-A72, originally released by ARM in 2015, represents a significant step up from the ancient Cortex-A53 (2012) but still trails the more modern Cortex-A76 (2018) found in Raspberry Pi 5 and Orange Pi 5 Max. The A72 offers approximately 1.8-2x the performance per clock compared to the A53, with better branch prediction, wider execution units, and more sophisticated memory prefetching. However, it lacks the A76's more advanced microarchitecture improvements and typically runs at lower clock speeds (2.2 GHz vs. 2.4 GHz for the A76 in the Pi 5).

The inclusion of four Cortex-A53 efficiency cores alongside the A72 performance cores gives the RK3576 a total of eight cores, allowing it to balance power consumption and performance. In practice, this means the system can handle background tasks and light workloads on the A53 cores while reserving the A72 cores for demanding applications. The big.LITTLE scheduler in the Linux kernel attempts to make intelligent decisions about which cores to use for which tasks, though the effectiveness varies depending on workload characteristics.

Memory, Storage, and Connectivity

Our test unit came configured with:

- 4GB LPDDR5 RAM (8GB and 16GB options available)

- 29GB eMMC internal storage (32GB nominal, formatted capacity lower)

- M.2 NVMe SSD support (our unit had a 932GB NVMe drive installed)

- WiFi 6 (802.11ax) and Bluetooth 5.3

- Gigabit Ethernet

- HDMI 2.0 output supporting 4K@60fps

- Multiple MIPI CSI camera interfaces

- USB 3.0 and USB 2.0 interfaces via the 100-pin connectors

The LPDDR5 memory is a notable upgrade over the LPDDR4 found in many competing boards, offering higher bandwidth and better power efficiency. In our testing, memory bandwidth didn't appear to be a significant bottleneck for CPU-bound workloads, though applications that heavily stress memory subsystems (large dataset processing, video encoding, etc.) may benefit from the faster RAM.

The inclusion of both eMMC storage and M.2 NVMe support provides excellent flexibility. The eMMC serves as a reliable boot medium with consistent performance, while the NVMe slot allows for high-capacity, high-speed storage expansion. This dual-storage approach is superior to SD card-only solutions, which suffer from reliability issues and inconsistent performance.

WiFi 6 and Bluetooth 5.3 represent current-generation wireless standards, providing better performance and lower latency than the WiFi 5 found in older boards. For robotics applications, low-latency wireless communication can be crucial for remote control and telemetry, making this a meaningful upgrade.

The NPU: 6 TOPS of AI Potential

The RK3576's integrated 6 TOPS Neural Processing Unit is the CM5-Pro's headline AI feature, designed to accelerate machine learning inference workloads. The NPU supports multiple quantization formats (INT4/INT8/INT16/BF16/TF32) and can interface with mainstream frameworks including TensorFlow, PyTorch, MXNet, and Caffe through Rockchip's RKNN toolkit.

In our testing, we confirmed the presence of the NPU hardware at /sys/kernel/iommu_groups/0/devices/27700000.npu and verified that the RKNN runtime library (librknnrt.so) and server (rknn_server) were installed and accessible. To validate real-world NPU performance, we ran MobileNet V1 image classification inference tests using the pre-installed RKNN model.

NPU Inference Benchmarks - MobileNet V1:

Running 10 inference iterations on a 224x224 RGB image (bell.jpg), we measured consistent performance:

- Average inference time: 161.8ms per image

- Min/Max: 146ms to 172ms

- Standard deviation: ~7.2ms

- Throughput: ~6.2 frames per second

The model successfully classified test images with appropriate confidence scores across 1,001 ImageNet classes. The inference pipeline includes:

- JPEG decoding and preprocessing

- Image resizing and color space conversion

- INT8 quantized inference on the NPU

- FP16 output tensor postprocessing

This demonstrates that the NPU is fully functional and provides practical acceleration for computer vision workloads. The ~160ms inference time for MobileNet V1 is reasonable for edge AI applications, though more demanding models like YOLOv8 or larger classification networks would benefit from the full 6 TOPS capacity.

Rockchip's RKNN toolkit provides a development workflow that converts trained models into RKNN format for efficient execution on the NPU. The process involves:

- Training a model using a standard framework (TensorFlow, PyTorch, etc.)

- Exporting the model to ONNX or framework-specific format

- Converting the model using rknn-toolkit2 on a PC

- Quantizing the model to INT8 or other supported formats

- Deploying the RKNN model file to the board

- Running inference using RKNN C/C++ or Python APIs

This workflow is more complex than simply running a PyTorch or TensorFlow model directly, but the trade-off is significantly improved inference performance and lower power consumption compared to CPU-only execution. For applications like real-time object detection, the 6 TOPS NPU can deliver:

- Face recognition: 240fps @ 1080p

- Object detection (YOLO-based models): 50fps @ 4K

- Semantic segmentation: 30fps @ 2K

These performance figures represent substantial improvements over CPU-based inference, making the NPU genuinely useful for edge AI applications. However, they also require investment in learning the RKNN toolchain, optimizing models for the specific NPU architecture, and managing the conversion pipeline as part of your development workflow.

RKLLM and Large Language Model Support:

To thoroughly test LLM capabilities, we performed end-to-end testing: model conversion on an x86_64 platform (LattePanda IOTA), transfer to the CM5-Pro, and NPU inference validation. RKLLM (Rockchip Large Language Model) toolkit enables running quantized LLMs on the RK3576's 6 TOPS NPU, supporting models including Qwen, Llama, ChatGLM, Phi, Gemma, InternLM, MiniCPM, and others.

LLM Model Conversion Benchmark:

We converted TinyLLAMA 1.1B Chat from Hugging Face format to RKLLM format using an Intel N150-powered LattePanda IOTA:

- Source Model: TinyLLAMA 1.1B Chat v1.0 (505 MB safetensors)

- Conversion Platform: x86_64 (RKLLM-Toolkit only available for x86, not ARM)

- Quantization: W4A16 (4-bit weights, 16-bit activations)

- Conversion Time Breakdown:

- Model loading: 6.95 seconds

- Building/Quantizing: 220.47 seconds (293 layers)

- Optimization: 206.72 seconds (22 optimization steps)

- Export to RKLLM format: 37.41 seconds

- Total Conversion Time: 264.83 seconds (4.41 minutes)

- Output File Size: 644.75 MB (increased from 505 MB due to RKNN format overhead)

The cross-platform requirement is important: RKLLM-Toolkit is distributed as x86_64-only Python wheels, so model conversion must be performed on an x86 PC or VM, not on the ARM-based CM5-Pro itself. Conversion time scales with model size and CPU performance - larger models on slower CPUs will take proportionally longer.

NPU LLM Inference Testing:

After transferring the converted model to the CM5-Pro, we successfully:

- ✓ Loaded the TinyLLAMA 1.1B model (645 MB) into RKLLM runtime

- ✓ Initialized NPU with 2-core configuration for W4A16 inference

- ✓ Verified token generation and text output

- ✓ Confirmed the model runs on NPU cores (not CPU fallback)

The RKLLM runtime v1.2.2 correctly identified the model configuration (W4A16, max_context=2048, 2 NPU cores) and enabled the Cortex-A72 cores [4,5,6,7] for host processing while the NPU handled inference.

Actual RK3576 LLM Performance (Official Rockchip Benchmarks):

Based on Rockchip's published benchmarks for the RK3576, small language models perform as follows:

- Qwen2 0.5B (w4a16): 34.24 tokens/second, 327ms first token latency, 426 MB memory

- MiniCPM4 0.5B (w4a16): 35.8 tokens/second, 349ms first token latency, 322 MB memory

- TinyLLAMA 1.1B (w4a16): 21.32 tokens/second, 518ms first token latency, 591 MB memory

- InternLM2 1.8B (w4a16): 13.65 tokens/second, 772ms first token latency, 966 MB memory

For context, the RK3588 (with more powerful NPU) achieves 42.58 tokens/second for Qwen2 0.5B - about 1.85x faster than the RK3576.

Practical Assessment:

The 30-35 tokens/second achieved with 0.5B models is usable for offline chatbots, text classification, and simple Q&A applications, but would feel noticeably slow compared to cloud LLM APIs or GPU-accelerated solutions. Humans typically read at 200-300 words per minute (~50 tokens/second), so 35 tokens/second is borderline for comfortable real-time conversation. Larger models (1.8B+) drop to 13 tokens/second or less, which feels sluggish for interactive use.

The complete workflow (download model → convert on x86 → transfer to ARM → run inference) works as designed but requires infrastructure: an x86 machine or VM for conversion, network transfer for large model files (645 MB), and familiarity with Python environments and RKLLM APIs. For embedded deployments, this is acceptable; for rapid prototyping, it adds friction compared to cloud-based LLM solutions.

Compared to Google's Coral TPU (4 TOPS), the RK3576's 6 TOPS provides 1.5x more computational power, though the Coral benefits from more mature tooling and broader community support. Against the Horizon X3's 5 TOPS, the RK3576 offers 20% more capability with far better CPU performance backing it up. For serious AI workloads, NVIDIA's Jetson platforms (40+ TOPS) remain in a different performance class, but at significantly higher price points and power requirements.

Performance Testing: Real-World Compilation Benchmarks

To assess the Banana Pi CM5-Pro's CPU performance, we ran our standard Rust compilation benchmark: building a complex ballistics simulation engine with numerous dependencies from a clean state, three times, and averaging the results. This real-world workload stresses CPU cores, memory bandwidth, compiler performance, and I/O subsystems.

Banana Pi CM5-Pro Compilation Times:

- Run 1: 173.16 seconds (2 minutes 53 seconds)

- Run 2: 162.29 seconds (2 minutes 42 seconds)

- Run 3: 165.99 seconds (2 minutes 46 seconds)

- Average: 167.15 seconds (2 minutes 47 seconds)

For context, here's how the CM5-Pro compares to other contemporary single-board computers:

| System | CPU | Cores | Average Time | vs. CM5-Pro |

|---|---|---|---|---|

| Orange Pi 5 Max | Cortex-A55/A76 | 8 (4+4) | 62.31s | 2.68x faster |

| Raspberry Pi CM5 | Cortex-A76 | 4 | 71.04s | 2.35x faster |

| LattePanda IOTA | Intel N150 | 4 | 72.21s | 2.31x faster |

| Raspberry Pi 5 | Cortex-A76 | 4 | 76.65s | 2.18x faster |

| Banana Pi CM5-Pro | Cortex-A53/A72 | 8 (4+4) | 167.15s | 1.00x (baseline) |

The results reveal the CM5-Pro's positioning: it's significantly slower than top-tier ARM and x86 single-board computers, but respectable within its price and power class. The 2.68x performance deficit versus the Orange Pi 5 Max is substantial, explained by the RK3588's newer Cortex-A76 cores running at higher clock speeds (2.4 GHz) with more advanced microarchitecture.

More telling is the comparison to the Raspberry Pi 5 and Raspberry Pi CM5, both featuring four Cortex-A76 cores at 2.4 GHz. Despite having eight cores to the Pi's four, the CM5-Pro is approximately 2.2x slower. This performance gap illustrates the generational advantage of the A76 architecture - the Pi 5's four newer cores outperform the CM5-Pro's four A72 cores plus four A53 cores combined for this workload.

The LattePanda IOTA's Intel N150, despite having only four cores, also outperforms the CM5-Pro by 2.3x. Intel's Alder Lake-N architecture, even in its low-power form, delivers superior single-threaded performance and more effective multi-threading than the RK3576.

However, context matters. The CM5-Pro's 167-second compilation time is still quite usable for development workflows. A project that takes 77 seconds to compile on a Raspberry Pi 5 will take 167 seconds on the CM5-Pro - an additional 90 seconds. For most developers, this difference is noticeable but not crippling. Compile times remain in the "get a coffee" range rather than the "go to lunch" range.

More importantly, the CM5-Pro vastly outperforms older ARM platforms. Compared to boards using only Cortex-A53 cores (like the Horizon X3 CM at 379 seconds), the CM5-Pro is 2.27x faster, demonstrating the value of the Cortex-A72 performance cores.

Geekbench 6 CPU Performance

To provide standardized synthetic benchmarks, we ran Geekbench 6.5.0 on the Banana Pi CM5-Pro:

Geekbench 6 Scores:

- Single-Core Score: 328

- Multi-Core Score: 1337

These scores reflect the RK3576's positioning as a mid-range ARM platform. The single-core score of 328 indicates modest per-core performance from the Cortex-A72 cores, while the multi-core score of 1337 demonstrates reasonable scaling across all eight cores (4x A72 + 4x A53). For context, the Raspberry Pi 5 with Cortex-A76 cores typically scores around 550-600 single-core and 1700-1900 multi-core, showing the generational advantage of the newer ARM architecture.

Notable individual benchmark results include:

- PDF Renderer: 542 single-core, 2904 multi-core

- Ray Tracer: 2763 multi-core

- Asset Compression: 2756 multi-core

- Horizon Detection: 540 single-core

- HTML5 Browser: 455 single-core

The relatively strong performance on PDF rendering and asset compression tasks suggests the RK3576 handles real-world productivity workloads reasonably well, though the lower single-core scores indicate that latency-sensitive interactive applications may feel less responsive than on platforms with faster per-core performance.

Full Geekbench results: https://browser.geekbench.com/v6/cpu/14853854

Comparative Analysis: CM5-Pro vs. the Competition

vs. Orange Pi 5 Max

The Orange Pi 5 Max represents the performance leader in our testing, powered by Rockchip's flagship RK3588 SoC with four Cortex-A76 + four Cortex-A55 cores. The 5 Max compiled our benchmark in 62.31 seconds - 2.68x faster than the CM5-Pro's 167.15 seconds.

Key differences:

Performance: The 5 Max's Cortex-A76 cores deliver substantially better single-threaded and multi-threaded performance. For CPU-intensive development work, the performance gap is significant.

NPU: The RK3588 includes a 6 TOPS NPU, matching the RK3576's AI capabilities. Both boards can run similar RKNN-optimized models with comparable inference performance.

Form Factor: The 5 Max is a full-sized single-board computer with on-board ports and connectors, while the CM5-Pro is a compute module requiring a carrier board. This makes the 5 Max more suitable for standalone projects and the CM5-Pro better for embedded integration.

Price: The Orange Pi 5 Max sells for approximately \$150-180 with 8GB RAM, compared to $103 for the CM5-Pro. The 5 Max's superior performance comes at a premium, but the cost-per-performance ratio remains competitive.

Memory: Both support up to 16GB RAM, though the 5 Max typically ships with higher-capacity configurations.

Verdict: If raw CPU performance is your priority and you can accommodate a full-sized SBC, the Orange Pi 5 Max is the clear choice. The CM5-Pro makes sense if you need the compute module form factor, want to minimize cost, or have thermal/power constraints that favor the slightly more efficient RK3576.

vs. Raspberry Pi 5

The Raspberry Pi 5, with its Broadcom BCM2712 SoC featuring four Cortex-A76 cores at 2.4 GHz, compiled our benchmark in 76.65 seconds - 2.18x faster than the CM5-Pro.

Key differences:

Performance: The Pi 5's four A76 cores outperform the CM5-Pro's 4+4 big.LITTLE configuration for most workloads. Single-threaded performance heavily favors the Pi 5, while multi-threaded performance depends on whether the workload can effectively utilize the CM5-Pro's additional A53 cores.

NPU: The Pi 5 lacks integrated AI acceleration, while the CM5-Pro includes a 6 TOPS NPU. For AI-heavy applications, this is a significant advantage for the CM5-Pro.

Ecosystem: The Raspberry Pi ecosystem is vastly more mature, with extensive documentation, massive community support, and guaranteed long-term software maintenance. While Banana Pi has committed to supporting the CM5-Pro until 2034, the Pi Foundation's track record inspires more confidence.

Software: Raspberry Pi OS is polished and actively maintained, with hardware-specific optimizations. The CM5-Pro runs generic ARM Linux distributions (Debian, Ubuntu) which work well but lack Pi-specific refinements.

Price: The Raspberry Pi 5 (8GB model) retails for \$80, significantly cheaper than the CM5-Pro's \$103. The Pi 5 offers better performance for less money - a compelling value proposition.

Expansion: The Pi 5's standard SBC form factor provides easier access to GPIO, HDMI, USB, and other interfaces. The CM5-Pro requires a carrier board, adding cost and complexity but enabling more customized designs.

Verdict: For general-purpose computing, development, and hobbyist projects, the Raspberry Pi 5 is the better choice: faster, cheaper, and better supported. The CM5-Pro makes sense if you specifically need AI acceleration, prefer the compute module form factor, or want more RAM/storage capacity than the Pi 5 offers.

vs. LattePanda IOTA

The LattePanda IOTA, powered by Intel's N150 Alder Lake-N processor with four cores, compiled our benchmark in 72.21 seconds - 2.31x faster than the CM5-Pro.

Key differences:

Architecture: The IOTA uses x86_64 architecture, providing compatibility with a wider range of software that may not be well-optimized for ARM. The CM5-Pro's ARM architecture benefits from lower power consumption and better mobile/embedded software support.

Performance: Intel's N150, despite having only four cores, delivers superior single-threaded performance and competitive multi-threaded performance against the CM5-Pro's eight cores. Intel's microarchitecture and higher sustained frequencies provide an edge for CPU-bound tasks.

NPU: The IOTA lacks dedicated AI acceleration, relying on CPU or external accelerators for machine learning workloads. The CM5-Pro's integrated 6 TOPS NPU is a clear advantage for AI applications.

Power Consumption: The N150 is a low-power x86 chip, but still consumes more power than ARM solutions under typical workloads. The CM5-Pro's big.LITTLE configuration can achieve better power efficiency for mixed workloads.

Form Factor: The IOTA is a small x86 board with Arduino co-processor integration, targeting maker/IoT applications. The CM5-Pro's compute module format serves different use cases, primarily embedded systems and custom carrier board designs.

Price: The LattePanda IOTA sells for approximately $149, more expensive than the CM5-Pro. However, it includes unique features like the Arduino co-processor and x86 compatibility that may justify the premium for specific applications.

Software Ecosystem: x86 enjoys broader commercial software support, while ARM excels in embedded and mobile-focused applications. Choose based on your software requirements.

Verdict: If you need x86 compatibility or want a compact standalone board with Arduino integration, the LattePanda IOTA makes sense despite its higher price. If you're working in ARM-native embedded Linux, need AI acceleration, or want the compute module form factor, the CM5-Pro is the better choice at a lower price point.

vs. Raspberry Pi CM5

The Raspberry Pi Compute Module 5 is the most direct competitor to the Banana Pi CM5-Pro, offering the same CM4-compatible form factor with different specifications. The Pi CM5 compiled our benchmark in 71.04 seconds - 2.35x faster than the CM5-Pro.

Key differences:

Performance: The Pi CM5's four Cortex-A76 cores at 2.4 GHz significantly outperform the CM5-Pro's 4x A72 + 4x A53 configuration. The architectural advantage of the A76 over the A72 translates to approximately 2.35x better performance in our testing.

NPU: The CM5-Pro's 6 TOPS NPU provides integrated AI acceleration, while the Pi CM5 requires external solutions (Hailo-8, Coral TPU) for hardware-accelerated inference. If AI is central to your application, the CM5-Pro's integrated NPU is more elegant.

Memory Options: The CM5-Pro supports up to 16GB LPDDR5, while the Pi CM5 offers up to 8GB LPDDR4X. For memory-intensive applications, the CM5-Pro's higher capacity could be decisive.

Storage: Both offer eMMC options, with the CM5-Pro available up to 128GB and the Pi CM5 up to 64GB. Both support additional storage via carrier board interfaces.

Price: The Raspberry Pi CM5 (8GB/32GB eMMC) sells for approximately $95, slightly cheaper than the CM5-Pro's $103. The CM5-Pro's extra features (more RAM/storage options, integrated NPU) justify the small price premium for those who need them.

Ecosystem: The Pi CM5 benefits from Raspberry Pi's ecosystem, tooling, and community. The CM5-Pro has decent support but can't match the Pi's extensive resources.

Carrier Boards: Both are CM4-compatible, meaning they can use the same carrier boards. However, some boards may not fully support CM5-Pro-specific features, and subtle electrical differences could cause issues in rare cases.

Verdict: For maximum CPU performance in the CM4 form factor, choose the Pi CM5. Its 2.35x performance advantage is significant for compute-intensive applications. Choose the CM5-Pro if you need integrated AI acceleration, more than 8GB of RAM, more than 64GB of eMMC storage, or prefer the better wireless connectivity (WiFi 6 vs. WiFi 5).

Use Cases and Recommendations

Based on our testing and analysis, here are scenarios where the Banana Pi CM5-Pro excels and where alternatives might be better:

Choose the Banana Pi CM5-Pro if you:

Need AI acceleration in a compute module: The integrated 6 TOPS NPU eliminates the need for external AI accelerators, simplifying hardware design and reducing BOM costs. For robotics, smart cameras, or IoT devices with AI workloads, this is a compelling advantage.

Require more than 8GB of RAM: The CM5-Pro supports up to 16GB LPDDR5, double the Pi CM5's maximum. If your application processes large datasets, runs multiple VMs, or needs extensive buffering, the extra RAM headroom matters.

Want high-capacity built-in storage: With up to 128GB eMMC options, the CM5-Pro can store large datasets, models, or applications without requiring external storage. This simplifies deployment and improves reliability compared to SD cards or network storage.

Prefer WiFi 6 and Bluetooth 5.3: Current-generation wireless standards provide better performance and lower latency than WiFi 5. For wireless robotics control or IoT applications with many connected devices, WiFi 6's improvements are meaningful.

Value long production lifetime: Banana Pi's commitment to produce the CM5-Pro until August 2034 provides assurance for commercial products with multi-year lifecycles. You can design around this module without fear of it being discontinued in 2-3 years.

Have thermal or power constraints: The RK3576's 8nm process and big.LITTLE architecture can deliver better power efficiency than always-on high-performance cores, extending battery life or reducing cooling requirements for fanless designs.

Choose alternatives if you:

Prioritize raw CPU performance: The Raspberry Pi 5, Pi CM5, Orange Pi 5 Max, and LattePanda IOTA all deliver significantly faster CPU performance. If your application is CPU-bound and doesn't benefit from the NPU, these platforms are better choices.

Want the simplest development experience: The Raspberry Pi ecosystem's polish, documentation, and community support make it the easiest platform for beginners and rapid prototyping. The Pi 5 or Pi CM5 will get you running faster with fewer obstacles.

Need maximum AI performance: NVIDIA Jetson platforms provide 40+ TOPS of AI performance with mature CUDA/TensorRT tooling. If AI is your primary workload, the investment in a Jetson module is worthwhile despite higher costs.

Require x86 compatibility: The LattePanda IOTA or other x86 platforms provide better software compatibility for commercial applications that depend on x86-specific libraries or software.

Work with standard SBC form factors: If you don't need a compute module and prefer the convenience of a full-sized SBC with onboard ports, the Orange Pi 5 Max or Raspberry Pi 5 are better choices.

The NPU in Practice: RKNN Toolkit and Ecosystem

While we didn't perform exhaustive AI benchmarking, our exploration of the RKNN ecosystem reveals both promise and challenges. The infrastructure exists: the NPU hardware is present and accessible, the runtime libraries are installed, and documentation is available from both Rockchip and Banana Pi. The RKNN toolkit can convert mainstream frameworks to NPU-optimized models, and community examples demonstrate YOLO11n object detection running successfully on the CM5-Pro.

However, the RKNN development experience is not as streamlined as more mature ecosystems. Converting and optimizing models requires learning Rockchip-specific tools and workflows. Debugging performance issues or accuracy degradation during quantization demands patience and experimentation. The documentation is improving but remains fragmented across Rockchip's official site, Banana Pi's docs, and community forums.

For developers already familiar with embedded AI deployment, the RKNN workflow will feel familiar - it follows similar patterns to TensorFlow Lite, ONNX Runtime, or other edge inference frameworks. For developers new to edge AI, the learning curve is steeper than cloud-based solutions but gentler than some alternatives (looking at you, Hailo's toolchain).

The 6 TOPS performance figure is real and achievable for properly optimized models. INT8 quantized YOLO models can indeed run at 50fps @ 4K, and simpler models scale accordingly. The NPU's support for INT4 and BF16 formats provides flexibility for trading off accuracy versus performance. For many robotics and IoT applications, the 6 TOPS NPU hits a sweet spot: enough performance for useful AI workloads, integrated into the SoC to minimize complexity and cost, and accessible through reasonable (if not perfect) tooling.

Build Quality and Physical Characteristics

The Banana Pi CM5-Pro adheres to the Raspberry Pi CM4 mechanical specification, featuring dual 100-pin high-density connectors arranged in the standard layout. Physical dimensions match the CM4, allowing drop-in replacement in compatible carrier boards. Our sample unit appeared well-manufactured with clean solder joints, proper component placement, and no obvious defects.

The module includes an on-board WiFi/Bluetooth antenna connector (U.FL/IPEX), power management IC, and all necessary supporting components. Unlike some compute modules that require extensive external components on the carrier board, the CM5-Pro is relatively self-contained, simplifying carrier board design.

Thermal performance is adequate but not exceptional. Under sustained load during our compilation benchmarks, the SoC reached temperatures requiring thermal management. For applications running continuous AI inference or heavy CPU workloads, active cooling (fan) or substantial passive cooling (heatsink and airflow) is recommended. The carrier board design should account for thermal dissipation, especially if the module will be enclosed in a case.

Software and Ecosystem

The CM5-Pro ships with Banana Pi's custom Debian-based Linux distribution, featuring a 6.1.75 kernel with Rockchip-specific patches and drivers. In our testing, the system worked well out of the box: networking functioned, sudo worked (refreshingly, after the Horizon X3 CM disaster), and package management operated normally.

The distribution includes pre-installed RKNN libraries and tools, enabling NPU development without additional setup. Python 3 and essential development packages are available, and standard Debian repositories provide access to thousands of additional packages. For developers comfortable with Debian/Ubuntu, the environment feels familiar and capable.

However, the software ecosystem lags behind Raspberry Pi's. Raspberry Pi OS includes countless optimizations, hardware-specific integrations, and utilities that simply don't exist for Rockchip platforms. Camera support, GPIO access, and peripheral interfaces work, but often require more manual configuration or programming compared to the Pi's plug-and-play experience.

Third-party software support varies. Popular frameworks like ROS2, OpenCV, and TensorFlow compile and run without issues. Hardware-specific accelerators (GPU, NPU) may require additional configuration or custom builds. Overall, the software situation is "good enough" for experienced developers but not as polished as the Raspberry Pi ecosystem.

Banana Pi's documentation has improved significantly over the years, with reasonably comprehensive guides covering basic setup, GPIO usage, and RKNN deployment. Community support exists through forums and GitHub, though it's smaller and less active than Raspberry Pi's communities. Expect to do more troubleshooting independently and rely less on finding someone who's already solved your exact problem.

Conclusion: A Capable Platform for Specific Niches

The Banana Pi CM5-Pro is a solid, if unspectacular, compute module that serves specific niches well while falling short of being a universal recommendation. Its combination of integrated 6 TOPS NPU, up to 16GB RAM, WiFi 6 connectivity, and CM4-compatible form factor creates a unique offering that competes effectively against alternatives when your requirements align with its strengths.

For projects needing AI acceleration in a compute module format, the CM5-Pro is arguably the best choice currently available. The integrated NPU eliminates the complexity and cost of external AI accelerators while delivering genuine performance improvements for inference workloads. The RKNN toolkit, while imperfect, provides a workable path to deploying optimized models. If your robotics platform, smart camera, or IoT device depends on local AI processing, the CM5-Pro deserves serious consideration.

For projects requiring more than 8GB of RAM or more than 64GB of storage in a compute module, the CM5-Pro is the only game in town among CM4-compatible options. This makes it the default choice for memory-intensive applications that need the compute module form factor.

For general-purpose computing, development, or applications where AI is not central, the Raspberry Pi CM5 is the better choice. Its 2.35x performance advantage is substantial and directly translates to faster build times, quicker application responsiveness, and better user experience. The Pi's ecosystem advantages further tip the scales for most users.

Our compilation benchmark results - 167 seconds for the CM5-Pro versus 71-77 seconds for Pi5/CM5 - illustrate the performance gap clearly. For development workflows, this difference is noticeable but workable. Most developers can tolerate the CM5-Pro's slower compilation times if other factors (AI acceleration, RAM capacity, price) favor it. But if maximum CPU performance is your priority, look elsewhere.

The comparison to the Orange Pi 5 Max reveals a significant performance gap (62 vs. 167 seconds), but also highlights different market positions. The 5 Max is a full-featured SBC designed for standalone use, while the CM5-Pro is a compute module designed for embedded integration. They serve different purposes and target different applications.

Against the LattePanda IOTA's x86 architecture, the CM5-Pro trades x86 compatibility for better power efficiency, integrated AI, and lower cost. The choice between them depends entirely on software requirements - x86-specific applications favor the IOTA, while ARM-native embedded applications favor the CM5-Pro.

The Banana Pi CM5-Pro earns a qualified recommendation: excellent for AI-focused embedded projects, good for high-RAM compute module applications, acceptable for general embedded Linux development, and not recommended if raw CPU performance or ecosystem maturity are priorities. At $103 for the 8GB/64GB configuration, it offers reasonable value for applications that leverage its strengths, though it won't excite buyers seeking the fastest or cheapest option.

If your project needs:

- AI acceleration integrated into a compute module

- More than 8GB RAM in CM4 form factor

- WiFi 6 and current wireless standards

- Guaranteed long production life (until 2034)

Then the Banana Pi CM5-Pro is a solid choice that delivers on its promises.

If your project needs:

- Maximum CPU performance

- The most polished software ecosystem

- The easiest development experience

- The lowest cost

Then the Raspberry Pi CM5 or Pi 5 remains the better option.

The CM5-Pro occupies a middle ground: not the fastest, not the cheapest, not the easiest, but uniquely capable in specific areas. For the right application, it's exactly what you need. For others, it's a compromise that doesn't quite satisfy. Choose accordingly.

Specifications Summary

Processor:

- Rockchip RK3576 (8nm process)

- 4x ARM Cortex-A72 @ 2.2 GHz (performance cores)

- 4x ARM Cortex-A53 @ 1.8 GHz (efficiency cores)

- Mali-G52 MC3 GPU

- 6 TOPS NPU (Rockchip RKNPU)

Memory & Storage:

- 4GB/8GB/16GB LPDDR5 RAM options

- 32GB/64GB/128GB eMMC options

- M.2 NVMe SSD support via carrier board

Video:

- 8K@30fps H.265/VP9 decoding

- 4K@60fps H.264/H.265 encoding

- HDMI 2.0 output (via carrier board)

Connectivity:

- WiFi 6 (802.11ax) and Bluetooth 5.3

- Gigabit Ethernet (via carrier board)

- Multiple USB 2.0/3.0 interfaces

- MIPI CSI camera inputs

- I2C, SPI, UART, PWM

Physical:

- Dual 100-pin board-to-board connectors (CM4-compatible)

- Dimensions: 55mm x 40mm

Benchmark Performance:

- Rust compilation: 167.15 seconds average

- 2.68x slower than Orange Pi 5 Max

- 2.35x slower than Raspberry Pi CM5

- 2.31x slower than LattePanda IOTA

- 2.18x slower than Raspberry Pi 5

- 2.27x faster than Horizon X3 CM

Pricing: ~$103 USD (8GB RAM / 64GB eMMC configuration)

Production Lifetime: Guaranteed until August 2034

Recommendation: Good choice for AI-focused embedded projects requiring compute module form factor; not recommended if raw CPU performance is the priority.

Review Date: November 3, 2025

Hardware Tested: Banana Pi CM5-Pro (ArmSoM-CM5) with 4GB RAM, 29GB eMMC, 932GB NVMe SSD

OS Tested: Banana Pi Debian (based on Debian GNU/Linux), kernel 6.1.75

Conclusion: Solid middle-ground option with integrated AI acceleration; best for specific niches rather than general-purpose use.